FutureGrid Rain: Cloud/HPC Provisioning

FutureGrid Rain is a tool that will allow users to place customized environments like virtual clusters or IaaS frameworks onto resources. The process of raining goes beyond the services offered by existing scheduling tools due to its higher-level toolset targeting virtualized and non-virtualized resources. Rain will be able to move resources from one infrastructure to another and compare the execution of an experiment in the different supported infrastructures.

In order to support Rain we need a flexible image management framework. Thus, Rain includes the FutureGrid Image Management framework which defines the full life cycle of the images in FutureGrid. It involves the process of creating, customizing, storing, sharing and registering images for different FutureGrid environments.

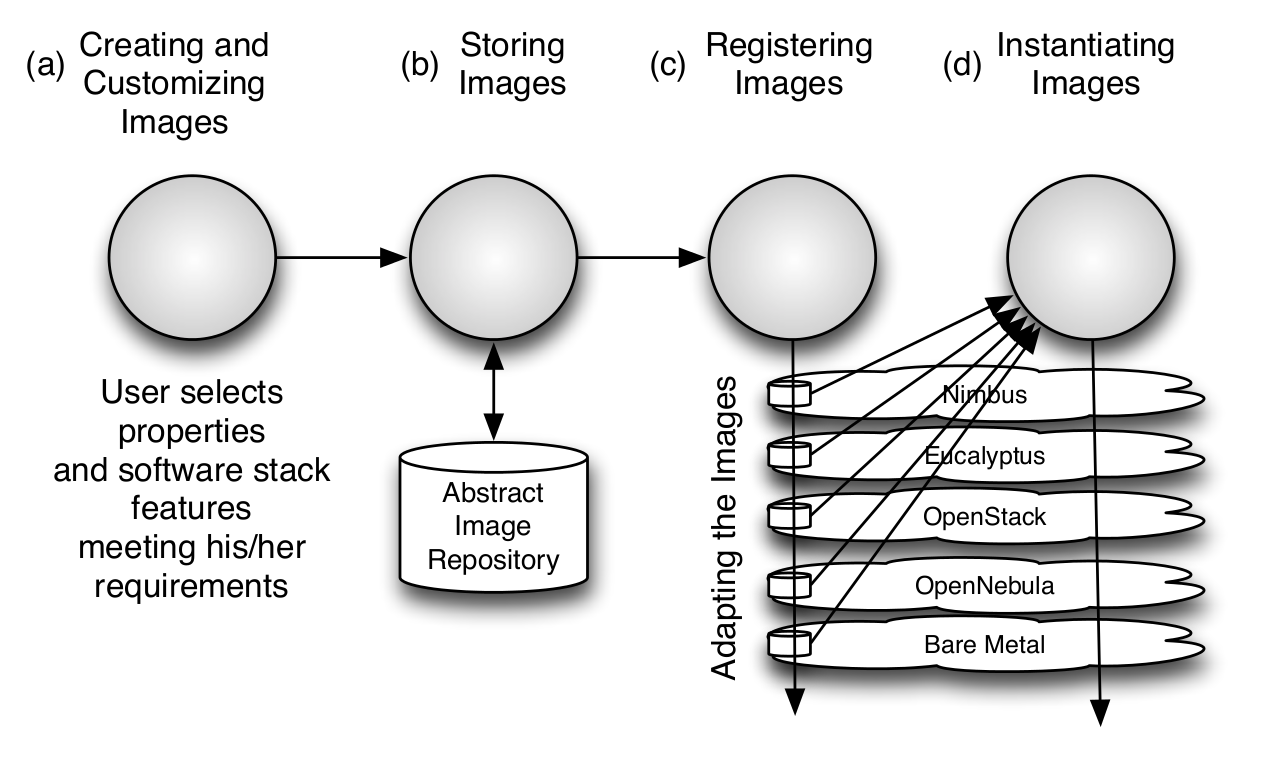

This framework allows users to generate personalized abstract images by simply specifying a list of requirements such as OS, architecture, software, and libraries. These images are generic enough that through manipulations they can be adapted for several IaaS or HPC infrastructures with little effort by the users. It will support the management of images for Nimbus, Eucalyptus, OpenStack, OpenNebula, and bare-metal HPC infrastructures.

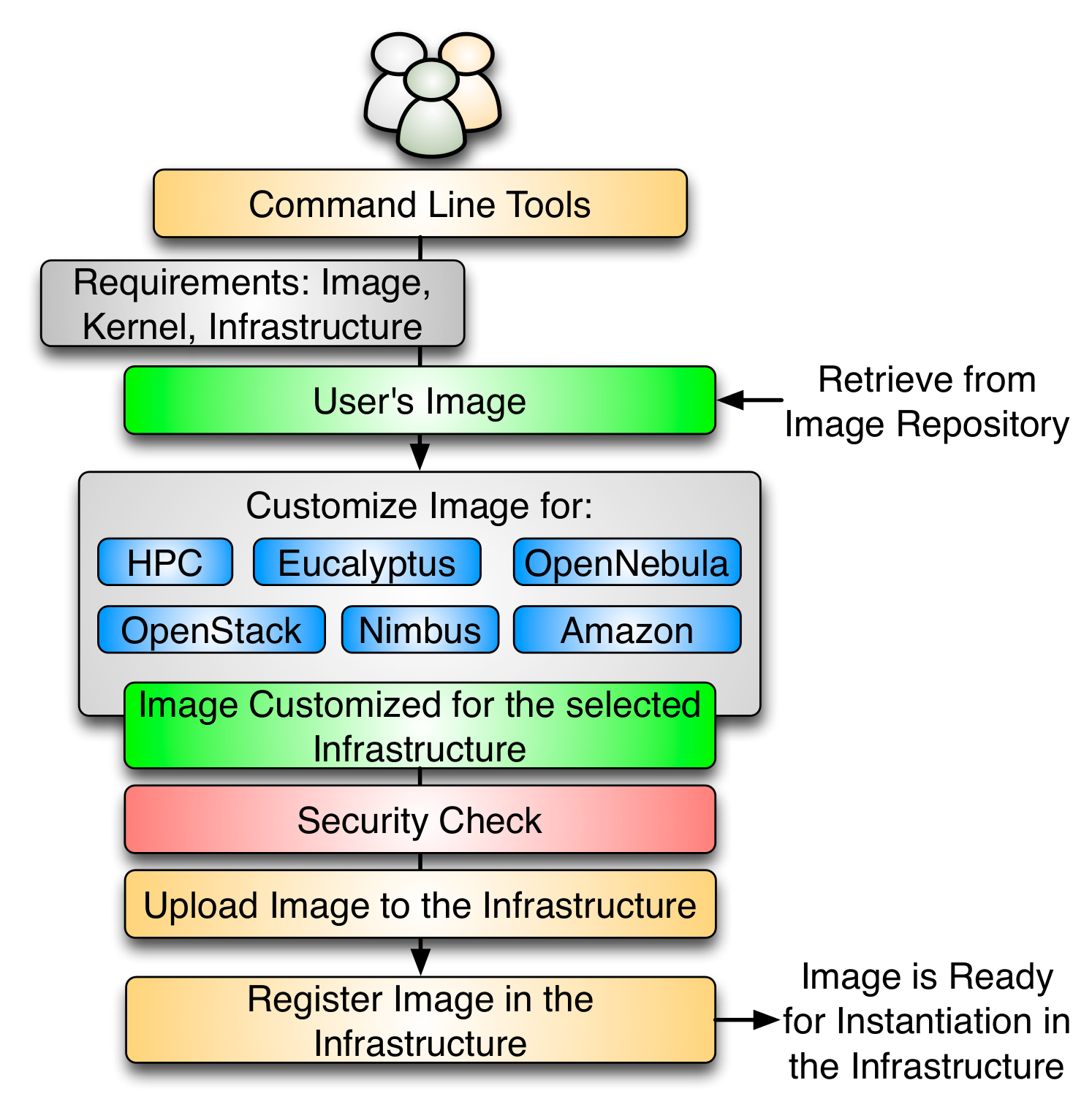

To support the image life cycle in FutureGrid, the Image Management framework comprises three components. First, the Image Generation tool creates and customizes images according to user requirements. Second, the Image Repository stores, catalogs, and shares images. Third, the Image Registration tool prepares, uploads, and registers images for specific environments, such as HPC or cloud frameworks. It also decides whether an image is secure enough to be registered or needs additional security tests.

Image Management provides the low-level software needed to achieve Dynamic Provisioning and Rain. Dynamic Provisioning is in charge of allocating machines with the requested image, which must have been previously registered in the infrastructure. Rain, in contrast, is our highest-level component: it uses Dynamic Provisioning and Image Management to provide custom environments that may or may not already exist. Therefore, a Rain request may involve the creation, registration, and provisioning of one or more images on a set of machines.
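The relationship between the three layers can be illustrated with a small sketch. This is not the Rain implementation; the `ImageState` class and the step names are hypothetical and only mirror the paragraph above: an image is generated if it does not exist, registered if the infrastructure does not know it yet, and then provisioned.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class ImageState:
    """Hypothetical view of where an image is in its life cycle."""
    in_repository: bool  # already generated and stored in the Image Repository
    registered: bool     # already registered in the target infrastructure


def plan_rain_request(state: ImageState) -> List[str]:
    """Return the ordered steps a Rain-like request would need for one image."""
    steps = []
    if not state.in_repository and not state.registered:
        steps.append("generate")   # Image Generation creates and stores the image
    if not state.registered:
        steps.append("register")   # Image Registration adapts and registers it
    steps.append("provision")      # Dynamic Provisioning allocates the machines
    return steps


if __name__ == "__main__":
    print(plan_rain_request(ImageState(in_repository=False, registered=False)))
    print(plan_rain_request(ImageState(in_repository=True, registered=True)))
```

An image that is already registered only needs the provisioning step, which is the case Dynamic Provisioning handles on its own.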

Due to the variety of services and the limited resources provided in FG, it is necessary to enable a mechanism to provision needed services onto resources. This also includes the assignment of resources to different IaaS or PaaS frameworks.

Rain makes it possible to compare the benefits and performance issues of IaaS and PaaS frameworks, and to evaluate which applications can benefit from such environments and how they must be configured to run efficiently. As part of this process, we allow the generation of abstract images and universal image registration with the various infrastructures, including Nimbus, Eucalyptus, OpenNebula, and OpenStack, as well as bare metal via the HPC services.

Making comparisons between the different infrastructures easier is one of the unique features of FutureGrid, and Rain is an essential component for doing so. Rain offers four main features:

Image management is a key component in any modern compute infrastructure, regardless of whether it is used for virtualized or non-virtualized resources. We distinguish a number of important processes that are an integral part of the life-cycle management of images. They include (a) image creation and customization, (b) sharing the images via a repository, (c) registering the image into the infrastructure, and (d) image instantiation. The problem of targeting not one, but multiple infrastructures amplifies the need for tools supporting these processes. Without them, only the most experienced users will be able to manage images, and only with a great investment of time.

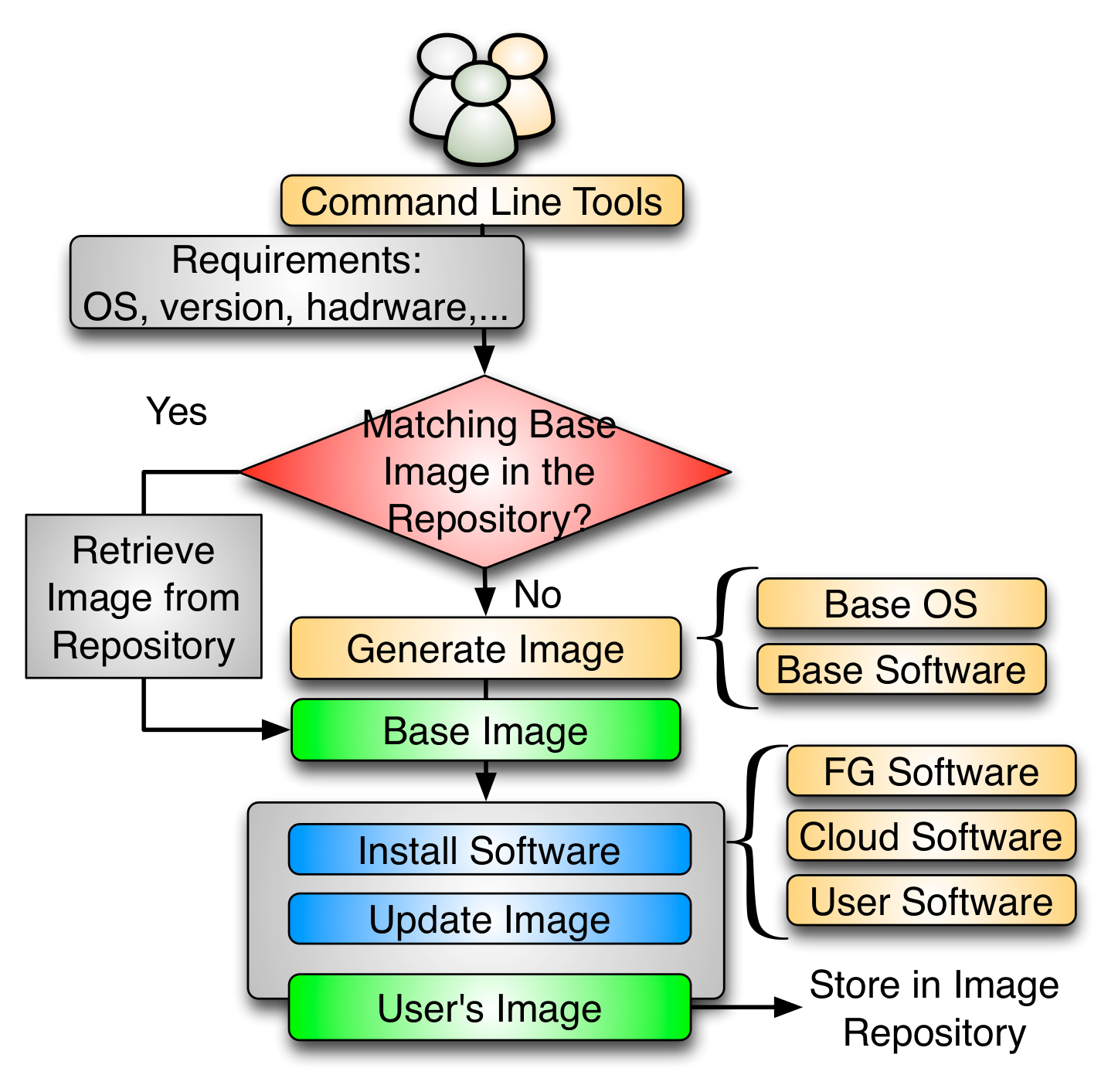

Image generation is the first step in our image management process and allows users to create images according to their specifications. Since FG is a testbed that supports different types of infrastructures, such as HPC or IaaS, the images created by this tool are not aimed at any specific environment. Instead, images are customized at registration time so that they integrate successfully into the desired infrastructure. This clear separation between image generation and registration provides a powerful model that allows us to extend the supported operating systems and the supported infrastructures independently of each other. Moreover, it reduces the number of images that we need to manage in the image repository and therefore the disk usage.

The flowchart depicted in the next figure shows the process followed to create an image.

Users initiate the process by specifying their requirements. These requirements can include the selection of the OS type, version, architecture, software, services, and more. First, the image generation tool searches the image repository to identify a base image to be cloned; if there is no good candidate, the base image is created from scratch. Once we have a base image, the image generation tool installs the software required by the user. This software must be in the official OS repositories or in the FG software repository. The latter contains software developed by the FG team or other approved software. The installation procedure can be aided by Chef, a configuration management tool, to ensure the software is installed and configured properly. After the image has been updated, it is stored in the image repository and becomes available for registration into one of the supported infrastructures. Our tool is general enough to deal with the installation particularities of different operating systems and architectures.
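The flow described above (look for a base image, fall back to building from scratch, install software, store the result) can be sketched as follows. This is a simplified illustration under stated assumptions, not the actual generation tool: the catalog is a plain list of dictionaries, and the function names and the `origin` field are hypothetical.

```python
from typing import Dict, List, Optional


def find_base_image(catalog: List[Dict], os_name: str, version: str,
                    arch: str) -> Optional[Dict]:
    """Return the first catalog entry matching OS, version and architecture."""
    for image in catalog:
        if (image["os"], image["version"], image["arch"]) == (os_name, version, arch):
            return image
    return None


def generate_image(catalog: List[Dict], os_name: str, version: str,
                   arch: str, software: List[str]) -> Dict:
    """Clone a matching base image or start from scratch, then add software."""
    base = find_base_image(catalog, os_name, version, arch)
    if base is not None:
        image = dict(base)                                  # clone the base image
        image["software"] = list(base.get("software", []))  # do not share the list
        image["origin"] = "cloned"
    else:
        image = {"os": os_name, "version": version, "arch": arch,
                 "software": [], "origin": "scratch"}
    for package in software:                                # install requested software
        if package not in image["software"]:
            image["software"].append(package)
    catalog.append(image)                                   # store in the repository
    return image


if __name__ == "__main__":
    repo = [{"os": "centos", "version": "5", "arch": "x86_64", "software": []}]
    print(generate_image(repo, "centos", "5", "x86_64", ["wget", "emacs"]))
    print(generate_image(repo, "ubuntu", "10.10", "x86_64", ["wget"]))
```

Cloning leaves the base image untouched, so it can be reused for later requests with a different software list.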

The image repository catalogs and stores images in a unified repository. It offers a common interface for distinguishing image types for different IaaS frameworks but also bare-metal images. This allows us to include a diverse set of images contributed not only by the FG development team but also by the user community that generates such images and wishes to share them. The images are augmented with information about the software stack installed on them including versions, libraries, and available services. This information is maintained in the catalog and can be searched by users and/or other FG services. Users looking for a specific image can discover available images fitting their needs using the catalog interface.

The information associated with the images is stored using the fields listed in the following table.

| Field Name | Type | Valid Values | Description | Access |

| imgId | String | | Unique identifier | Read-Only |

| owner | String | | Image’s owner | Read-Only |

| os | String | | Operating system | Read-Write |

| arch | String | | Architecture of the image | Read-Write |

| description | String | | Description of the image | Read-Write |

| tag | String list | | Image’s keywords | Read-Write |

| vmType | String | none, xen, kvm, virtualbox, vmware | Virtual machine type | |

| imgType | String | machine, kernel, eucalyptus, nimbus, opennebula, openstack | Aim of the image | Read-Write |

| permission | String | public, private | Access permission to the image | Read-Write |

| imgStatus | String | available, locked | Status of the image | Read-Write |

| createdDate | Date | | Upload date | Read-Only |

| lastAccess | Date | | Last time the image was accessed | Read-Only |

| accessCount | Long | | Number of times the image has been accessed | Read-Only |

| size | Long | | Size of the image | Read-Only |
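As an illustration of how the fields in the table above fit together, the following sketch models the user-editable metadata as a Python dataclass and checks the fields that have a fixed set of valid values. The class, its default values, and the case-insensitive comparison are assumptions made for this example; they are not taken from the repository code.

```python
from dataclasses import dataclass, field
from typing import List

# Valid values copied from the table of metadata fields.
VM_TYPES = {"none", "xen", "kvm", "virtualbox", "vmware"}
IMG_TYPES = {"machine", "kernel", "eucalyptus", "nimbus", "opennebula", "openstack"}
PERMISSIONS = {"public", "private"}
STATUSES = {"available", "locked"}


@dataclass
class ImageMetadata:
    imgId: str
    owner: str
    os: str = ""
    arch: str = ""
    description: str = ""
    tag: List[str] = field(default_factory=list)
    vmType: str = "none"           # default chosen for the example only
    imgType: str = "machine"       # default chosen for the example only
    permission: str = "private"    # default chosen for the example only
    imgStatus: str = "available"   # default chosen for the example only

    def validate(self) -> List[str]:
        """Return a list of problems; an empty list means the record is valid."""
        problems = []
        checks = [("vmType", VM_TYPES), ("imgType", IMG_TYPES),
                  ("permission", PERMISSIONS), ("imgStatus", STATUSES)]
        for name, allowed in checks:
            value = getattr(self, name)
            # Assumption: values are compared case-insensitively.
            if value.lower() not in allowed:
                problems.append(f"{name}={value!r} is not one of {sorted(allowed)}")
        return problems


if __name__ == "__main__":
    record = ImageMetadata("964160263274803087640112", "jdiaz",
                           os="Ubuntu", arch="x86_64", imgType="Openstack")
    print(record.validate())
```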

Once the image has been created and stored in the repository, we need to register it in the targeted infrastructure before we can instantiate it. The user only needs to specify the image, the targeted infrastructure, and the kernel. The kernel is optional and allows advanced users to select the most appropriate kernel for their experiments. The tool provides a list of available kernels organized by infrastructure; users may also request support for other kernels, such as one they have customized themselves. Registering an image also includes adapting it to the infrastructure. Infrastructures often differ from one another, which requires further customization, a security check, the upload of the image to the infrastructure repository, and its registration. The process of adaptation and registration is depicted in more detail in Figure 4. The customizations include the configuration of network IP, DNS, file system table, and kernel modules. Additional configuration is performed depending on the targeted infrastructure.

In the HPC infrastructure the images are converted to network bootable images to be provisioned on bare-metal machines. Here, the customization process configures the image, so it can be integrated into the pool of deployable images accessible by the scheduler. In our case this is Moab. Hence, if such an image is specified as part of the job description the scheduler will conduct the provisioning of the image for us. These images are stateless and the system is restored by reverting to a default OS once the running job requiring a customized image is completed.

Images targeted for cloud infrastructures need to be converted into VM disks. These images also need some additional configuration to enable VM contextualization in the selected cloud. Our plan is to support the main IaaS clouds, namely Eucalyptus, Nimbus, OpenStack, OpenNebula, and Amazon Web Services (AWS). As our tool is extensible, we can also support other cloud frameworks.

At the moment, our software only provides command line interfaces. Thus, users need access to the machine where the client part of the software is installed. Currently, this is installed and configured on the India cluster (india.futuregrid.org). Since our software interacts with different cloud infrastructures, users need to have the appropriate credentials for each of them.

Once users have the appropriate accounts, they can log in to India and use the module functionality to load the environment variables:

$ ssh <username>@india.futuregrid.org
$ module load futuregrid

Note

At this point, users have to explicitly request access to the Image Management and Rain tools by sending a ticket to https://portal.futuregrid.org/help.

To ease the use of the FG tools, we have created a shell that provides a common interface for all of them, so users only need to remember how to start the shell. Once logged into the shell, users have access to a number of features, including help, command auto-completion, and a list of available commands organized by tool. Moreover, users only need to type their password once, when they log into the shell.

Users can log into the shell by executing:

$ fg-shell -u <username>

Note

Users need to use their FutureGrid portal password.

More information about using the shell can be found in the FutureGrid Shell Manual.

The Image Repository is a service to query, store, and update images through a unique and common interface. Next, we show some examples of the Image Repository usage (fg-repo command). More details can be found in the Image Repository Manual.

Additionally, the Image Repository manages the user database for all the image management components. This database is used to authorize users, to control the user’s quotas and to record usage information. Therefore, this database complements the LDAP server which is mainly focused on the user authentication.

When using fg-shell, users need to load the Image Repository context by executing use repo inside the shell. The Image Repository environment is also included in the Image Management (image) and Rain (rain) contexts. Once there is an active context, the help command will show only the available commands for such context. Available contexts can be listed using the contexts command. More information about the shell can be found in the FutureGrid Shell Manual.

Upload an image

$ fg-repo -p /home/javi/image.iso "vmtype=kvm&os=Centos5&arch=i386&description=this is a test description&tag=tsttag1, tsttag2&permission=private" -u jdiaz
$ fg-repo -p /home/javi/image.iso "ImgType=Openstack&os=Ubuntu&arch=x86_64&description=this is a test description" -u jdiaz

put /home/javi/image.iso ImgType=Openstack&os=Ubuntu&arch=x86_64&description=this is a test description

Note

The & character is used to separate different metadata fields.
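The attribute string format used in the examples above (`field=value` pairs joined with `&`) can be built and parsed with a few lines of Python. This is only a sketch of the format as it appears in this document; the helper names are hypothetical, and whether the real tool supports escaping `&` inside a value is not known, so the sketch simply rejects such values.

```python
from typing import Dict


def build_attribute_string(fields: Dict[str, str]) -> str:
    """Join metadata fields into the 'key=value&key=value' form."""
    for key, value in fields.items():
        if "&" in key or "=" in key or "&" in value:
            raise ValueError(f"cannot encode field {key!r}: reserved character")
    return "&".join(f"{key}={value}" for key, value in fields.items())


def parse_attribute_string(text: str) -> Dict[str, str]:
    """Split an attribute string back into a dictionary of fields."""
    result = {}
    for part in text.split("&"):
        if not part:
            continue
        key, sep, value = part.partition("=")
        if not sep:
            raise ValueError(f"missing '=' in {part!r}")
        result[key.strip()] = value.strip()
    return result


if __name__ == "__main__":
    attrs = build_attribute_string({"vmtype": "kvm", "os": "Centos5", "arch": "i386"})
    print(attrs)
    print(parse_attribute_string(attrs))
```

On the command line the resulting string still has to be enclosed in double quotes, because `&` is special to the shell.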

Get an image

$ fg-repo -g 964160263274803087640112 -u jdiaz

get 964160263274803087640112

Modify the metadata of an image

$ fg-repo -m 964160263274803087640112 "ImgType=Opennebula&os=Ubuntu10" -u jdiaz

modify 964160263274803087640112 ImgType=Opennebula&os=Ubuntu10

Query Image Repository

$ fg-repo -q "* where vmType=kvm" -u jdiaz

list * where vmType=kvm

Add user to the Image Repository

$ fg-repo --useradd juan -u jdiaz
$ fg-repo --setuserstatus juan active -u jdiaz

user -a juan
user -m juan status active

This component creates images, according to user requirements, that can be registered in FutureGrid. Since FG is a testbed that supports different types of infrastructures, such as HPC or IaaS frameworks, the images created by this tool are not aimed at any specific environment. Instead, images are customized at registration time so that they integrate successfully into the desired infrastructure.

Next, we provide some examples of the Image Generation usage (fg-generate command). More details can be found in the Image Generation Manual.

When using fg-shell, users need to load the Image Management context by executing use image inside the shell. The Image Management environment is also included in the Rain (rain) contexts. Once there is an active context, the help command will show only the available commands for such context. Available contexts can be listed using the contexts command. More information about the shell can be found in the FutureGrid Shell Manual.

Generate a CentOS image

$ fg-generate -o centos -v 5 -a x86_64 -s wget,emacs,python26 -u jdiaz

generate -o centos -v 5 -a x86_64 -s wget,emacs,python26

Generate an Ubuntu image

$ fg-generate -o ubuntu -v 10.10 -a x86_64 -s wget,openmpi-bin -u jdiaz

generate -o ubuntu -v 10.10 -a x86_64 -s wget,openmpi-bin

This tool is responsible for customizing images for specific infrastructures and registering them in such infrastructures. Currently, we fully support HPC (bare-metal machines), Eucalyptus, OpenStack, and Nimbus infrastructures. OpenNebula is also implemented but we do not have this infrastructure in production yet.

Next, we provide some examples of the Image Registration usage (fg-register command). More details can be found in the Image Registration Manual.

When using fg-shell, users need to load the Image Management context by executing use image inside the shell. The Image Management environment also loads the Image Repository context. The Image Management is also included in the Rain (rain) contexts. Once there is an active context, the help command will show only the available commands for such context. Available contexts can be listed using the contexts command. More information about the shell can be found in the FutureGrid Shell Manual.

Register an image for the HPC Infrastructure India

$ fg-register -r 964160263274803087640112 -x india -u jdiaz

register -r 964160263274803087640112 -x india

Register an image for OpenStack (if you followed the FG Openstack tutorial, your novarc will probably be in ~/openstack/novarc)

$ fg-register -r 964160263274803087640112 -s india -v ~/novarc -u jdiaz

register -r 964160263274803087640112 -s india -v ~/novarc

Customize an image for Eucalyptus but do not register it (here -v ~/eucarc is not needed because we are not going to register the image in the infrastructure)

$ fg-register -r 964160263274803087640112 -e india -g -u jdiaz

register -r 964160263274803087640112 -e india -g

Register an image for Nimbus

$ fg-register -r 964160263274803087640112 -n hotel -v ~/hotel.conf -u jdiaz

register -r 964160263274803087640112 -n hotel -v ~/hotel.conf

List available kernels for the HPC infrastructure India

$ fg-register --listkernels -x india -u jdiaz

hpclistkernels india

List available kernels for OpenStack

$ fg-register --listkernels -s india -u jdiaz

cloudlistkernels -s india

Deregister an image from OpenStack (if you followed the FG Openstack tutorial, your novarc will probably be in ~/openstack/novarc)

$ fg-register --deregister ami-00000126 -s india -v ~/novarc -u jdiaz

deregister --deregister ami-00000126 -s india -v ~/novarc

Deregister an image from HPC (user role must be admin)

$ fg-register --deregister centosjdiaz1610805121 -x india -u jdiaz

deregister --deregister centosjdiaz1610805121 -x india

List information about the available sites

$ fg-register --listsites -u jdiaz

listsites

The output would be something like

Supported Sites Information
===========================
Cloud Information
-----------------
SiteName: sierra
Description: In this site we support Eucalyptus 3.
Infrastructures supported: ['Eucalyptus']
SiteName: hotel
Description: In this site we support Nimbus 2.9.
Infrastructures supported: ['Nimbus']
SiteName: india
Description: In this site we support Eucalyptus 2 and OpenStack Cactus. OpenNebula is not deployed in production but you can adapt images for it too.
Infrastructures supported: ['Eucalyptus', 'OpenStack', 'OpenNebula']
HPC Information (baremetal)
---------------------------
SiteName: india
RegisterXcat Service Status: Active
RegisterMoab Service Status: Active
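If the site listing needs to be consumed by a script, it can be turned into a dictionary. The sketch below assumes that each `SiteName:`, `Description:`, and `Infrastructures supported:` entry is printed on its own line, which cannot be confirmed from the sample above; the function name and the shortened sample text are made up for this example.

```python
import ast
from typing import Dict, List

# Shortened sample, with line breaks assumed.
SAMPLE = """\
Cloud Information
-----------------
SiteName: sierra
Description: In this site we support Eucalyptus 3.
Infrastructures supported: ['Eucalyptus']
SiteName: india
Description: In this site we support Eucalyptus 2 and OpenStack Cactus.
Infrastructures supported: ['Eucalyptus', 'OpenStack', 'OpenNebula']
HPC Information (baremetal)
---------------------------
SiteName: india
RegisterXcat Service Status: Active
"""


def cloud_sites(output: str) -> Dict[str, List[str]]:
    """Map each cloud site name to the list of infrastructures it supports."""
    sites: Dict[str, List[str]] = {}
    current = None
    for line in output.splitlines():
        key, sep, value = line.partition(":")
        if not sep:
            continue                      # headings and separator lines
        key, value = key.strip(), value.strip()
        if key == "SiteName":
            current = value
        elif key == "Infrastructures supported" and current is not None:
            # The list is printed in Python literal syntax.
            sites[current] = ast.literal_eval(value)
    return sites


if __name__ == "__main__":
    print(cloud_sites(SAMPLE))
```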

This component allows users to dynamically register FutureGrid software environments as a requirement of a job submission. It makes use of the registration tool described previously. Currently, we only support HPC job submissions.

Next, we provide some examples of the Rain usage (fg-rain command). A detailed manual can be found in the Rain Manual.

When using fg-shell, users need to load the Rain context by executing use rain inside the shell. The Rain environment also loads the Image Repository and Image Management contexts. Once there is an active context, the help command will show only the available commands for such context. Available contexts can be listed using the contexts command. More information about the shell can be found in the FutureGrid Shell Manual.

Run a job on four nodes on India using an image stored in the Image Repository (this involves the registration of the image in the HPC infrastructure)

$ fg-rain -r 1231232141 -x india -m 4 -j myscript.sh -u jdiaz

use rain #if your prompt is different to fg-rain>
fg-rain> launch -r 1231232141 -x india -m 4 -j myscript.sh

Run a job on two nodes on India using an image already registered in the HPC Infrastructure India

$ fg-rain -i centosjavi434512 -x india -m 2 -j myscript.sh -u jdiaz

use rain #if your prompt is different to fg-rain>
fg-rain> launch -i centosjavi434512 -x india -m 2 -j myscript.sh

Interactive mode. Instantiate two VMs using an image already registered on OpenStack

$ fg-rain -i ami-00000126 -s india -v ~/novarc -m 2 -I -u jdiaz

use rain #if your prompt is different to fg-rain>
fg-rain> launch -i ami-00000126 -s india -v ~/novarc -m 2 -I

Run an MPI job on six VMs using an image already registered on Eucalyptus (the image must have the mpich2 package installed)

Content of mpichjob.sh:

#!/bin/bash
#real home is /tmp/jdiaz/
#VM home is /N/u/jdiaz/
#$HOME/machines is a file with the VMs involved in this job
cd /tmp/N/u/jdiaz/mpichexample/
mpiexec.hydra -machinefile /N/u/jdiaz/machines -np `wc -l /N/u/jdiaz/machines | cut -d" " -f1` /tmp/N/u/jdiaz/example/a.out > /tmp/N/u/jdiaz/output.mpichexample

$ fg-rain -i ami-00000126 -e india -v ~/eucarc -j mpichjob.sh -m 6 -u jdiaz

use rain #if your prompt is different to fg-rain>
fg-rain> launch -i ami-00000126 -e india -v ~/eucarc -j mpichjob.sh -m 6

Run a Hadoop job on three VMs using an image already registered on OpenStack (the image must have the Java package installed; Hadoop is automatically installed and configured by the tool)

- For this example, the inputdir1 directory contains ebooks from the Project Gutenberg downloaded in Plain Text UTF-8 encoding:

Content of hadoopword.sh:

hadoop jar $HADOOP_CONF_DIR/../hadoop-examples*.jar wordcount inputdir1 outputdir

- Using the CLI

$ fg-rain -i ami-000001bf -s india -v ~/novarc -j ~/hadoopword.sh -m 3 --inputdir ~/inputdir1/ --outputdir ~/outputdir -u jdiaz

use rain #if your prompt is different to fg-rain>
fg-rain> launchadoop -i ami-000001bf -s india -v ~/novarc -j ~/hadoopword.sh -m 3 --inputdir ~/inputdir1/ --outputdir ~/outputdir

Interactive mode. Set up a Hadoop cluster on three VMs using an image already registered on OpenStack (the image must have the Java package installed; Hadoop is automatically installed and configured by the tool)

- For this example, the inputdir1 directory contains ebooks from the Project Gutenberg downloaded in Plain Text UTF-8 encoding:

Content of hadoopword.sh:

hadoop jar $HADOOP_CONF_DIR/../hadoop-examples*.jar wordcount inputdir1 outputdir

- Using the CLI

$ fg-rain -i ami-000001bf -s india -v ~/novarc -I -m 3 --inputdir ~/inputdir1/ --outputdir ~/outputdir -u jdiaz

use rain #if your prompt is different to fg-rain>
fg-rain> launchadoop -i ami-000001bf -s india -v ~/novarc -I -m 3 --inputdir ~/inputdir1/ --outputdir ~/outputdir

Run a Hadoop job on three machines using an image already registered on the HPC infrastructure (the image must have the Java package installed; Hadoop is automatically installed and configured by the tool)

- For this example, the inputdir1 directory contains ebooks from the Project Gutenberg downloaded in Plain Text UTF-8 encoding:

Content of hadoopword.sh:

hadoop jar $HADOOP_CONF_DIR/../hadoop-examples*.jar wordcount inputdir1 outputdir

- Using the CLI

$ fg-rain -x india -j ~/hadoopword.sh -m 3 --inputdir ~/inputdir1/ --outputdir ~/outputdir --walltime 1 -u jdiaz

use rain #if your prompt is different to fg-rain>
fg-rain> launchadoop -x india -j ~/hadoopword.sh -m 3 --inputdir ~/inputdir1/ --outputdir ~/outputdir --walltime 1

In this section you will find information on how to use the command line interfaces of our tools.

The Image Repository is a service to query, store, and update images through a unique and common interface.

usage: fg-repo [-h] -u user [-d]
               (-q [AttributeString] | -g imgId | -p imgFile [AttributeString ...] | -m imgId AttributeString
                | -r imgId [imgId ...] | -s imgId permissionString | --useradd userId | --userdel userId
                | --userlist | --setuserquota userId quotaExpresion | --setuserrole userId role | --setuserstatus userId status
                | --histimg [imgId] | --histuser [userId])
               [--nopasswd]

Options between brackets are optional. Parentheses mean that you need to specify exactly one of the enclosed options.
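The "exactly one of the options in parentheses" rule is the behaviour of a required, mutually exclusive option group. The sketch below reproduces it with Python's standard `argparse` module for a small subset of the fg-repo options. It is an illustration only, not the fg-repo source: the program name `fg-repo-sketch` and the `"*"` value used when `-q` is given without an argument are assumptions.

```python
import argparse


def build_parser() -> argparse.ArgumentParser:
    parser = argparse.ArgumentParser(prog="fg-repo-sketch")
    # Required option, shown without brackets in the usage line.
    parser.add_argument("-u", "--user", required=True, metavar="user")
    # Optional flag, shown between brackets.
    parser.add_argument("--nopasswd", action="store_true")
    # Exactly one of these must be given, shown between parentheses.
    action = parser.add_mutually_exclusive_group(required=True)
    action.add_argument("-q", "--list", nargs="?", const="*",
                        metavar="AttributeString")
    action.add_argument("-g", "--get", metavar="imgId")
    action.add_argument("-r", "--remove", nargs="+", metavar="imgId")
    return parser


if __name__ == "__main__":
    parser = build_parser()
    print(parser.format_usage())
    print(parser.parse_args(["-u", "jdiaz", "-g", "964160263274803087640112"]))
```

Calling the parser without any of `-q`, `-g`, or `-r`, or with two of them at once, makes `argparse` print an error and exit.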

| Option | Description |

| -h/--help | Shows help information and exit. |

| -u/--user <userName> | FutureGrid HPC user name, that is, the one used to login into the FG resources. |

| -q/--list [queryString] | Get list of images that meet the criteria. |

| -g/--get <imgId> | Get an image by specifying its unique identifier. |

| -p/--put <imgFile> [attributeString] | Store image into the repository and its metadata defined in attributeString. Default metadata is provided if the argument is missing. |

| -m/--modify <imgId> <attributeString> | Modify the metadata associated with the image. |

| -r/--remove <imgId> [imgId ...] | Delete images from the Repository. |

| -s/--setpermission <imgId> <permissionString> | Change the permission of a particular image. Valid values are public, private. |

| --histimg [imgId] | Get usage information of an image. If no argument is provided, it shows the usage information of all images. |

| --nopasswd | If this option is used, the password is not requested. This is intended for systems daemons like Inca. |

The following options are available only for users with admin role.

| Option | Description |

| --useradd <userId> | Add a new user to the image management database. |

| --userdel <userId> | Delete a user from the image management database. |

| --userlist | List of users. |

| --setuserquota <userId> <quota> | Modify the quota of a user. The quota is given in bytes, but math expressions are allowed (4*1024*1024). By default each user has 4GB of disk space. |

| --setuserrole <userId> <role> | Modify the role of a user. Valid values: admin and user roles. |

| --setuserstatus <userId> <status> | Modify the status of a user. Valid values: pending, active, and inactive. |

| --histuser [userId] | Get usage information of a user. If no argument is provided, it shows the usage information of all users. This option can also be used by normal users to show their own information. |

Note

While using the command line interface, the attributeString, queryString, and quotaExpression arguments must be enclosed in double-quote (") characters.
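The quota argument accepts arithmetic such as `4*1024*1024` instead of a plain number of bytes. How the real tool evaluates the expression is not documented here; the sketch below shows one safe way to do it in Python, walking the parsed expression with the `ast` module and allowing only integers and basic operators rather than calling `eval`. The function name and the set of allowed operators are assumptions.

```python
import ast
import operator

# Operators accepted in a quota expression (an assumption for this sketch).
_OPS = {
    ast.Add: operator.add,
    ast.Sub: operator.sub,
    ast.Mult: operator.mul,
    ast.FloorDiv: operator.floordiv,
}


def eval_quota(expression: str) -> int:
    """Evaluate an integer arithmetic expression and return a size in bytes."""
    def visit(node: ast.AST) -> int:
        if isinstance(node, ast.Constant) and type(node.value) is int:
            return node.value
        if isinstance(node, ast.BinOp) and type(node.op) in _OPS:
            return _OPS[type(node.op)](visit(node.left), visit(node.right))
        raise ValueError("unsupported quota expression")

    value = visit(ast.parse(expression, mode="eval").body)
    if value < 0:
        raise ValueError("quota must not be negative")
    return value


if __name__ == "__main__":
    print(eval_quota("4*1024*1024"))        # 4 MiB expressed in bytes
    print(eval_quota("4*1024*1024*1024"))   # 4 GiB expressed in bytes
```

Anything other than integer arithmetic, for example a function call, is rejected with a `ValueError`.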

Upload an image

$ fg-repo -p /home/javi/image.iso "vmtype=kvm&os=Centos5&arch=i386&description=this is a test description&tag=tsttag1, tsttag2&permission=private" -u jdiaz
$ fg-repo -p /home/javi/image.iso "ImgType=Openstack&os=Ubuntu&arch=x86_64&description=this is a test description" -u jdiaz

Note

The & character is used to separate different metadata fields.

Get an image

$ fg-repo -g 964160263274803087640112 -u jdiaz

Modify the metadata of an image

$ fg-repo -m 964160263274803087640112 "ImgType=Opennebula&os=Ubuntu10" -u jdiaz

Query Image Repository

$ fg-repo -q "* where vmType=kvm" -u jdiaz

Add user to the Image Repository

$ fg-repo --useradd juan -u jdiaz
$ fg-repo --setuserstatus juan active -u jdiaz

This service generates images with the requested OS and software stacks specification.

usage: fg-generate [-h] -u USER [-d] -o OSName [-v OSversion] [-a arch]
                   [--baseimage | -s software] [--scratch] [-n givenname]
                   [-e description] [-g] [-z SIZE] [--nopasswd]

Options between brackets are optional. Parentheses mean that you need to specify exactly one of the enclosed options.

| Option | Description |

| -h/--help | Shows help information and exit. |

| -u/--user <userName> | FutureGrid HPC user name, that is, the one used to login into the FG resources. |

| -o/--os <osName> | Specify the desired Operating System for the new image. Currently, CentOS and Ubuntu are supported |

| -v/--version <osVersion> | Operating System version. In the case of Centos, it can be 5 or 6. In the case of Ubuntu, it can be karmic(9.10), lucid(10.04), maverick(10.10), natty (11.04) |

| -a/--arch <arch> | Destination hardware architecture (x86_64 or i386) |

| --baseimage | Generate a Base Image that will be used to generate other images. In this way, the image generation process will be faster. |

| -s/--software <software> | List of software packages, separated by commas, that will be installed in the image. |

| --scratch | Generate the image from scratch without using any Base Image from the repository. |

| -n/--name <givenname> | Desired recognizable name of the image. |

| -e/--description <description> | Short description of the image and its purpose. |

| -g/--getimg | Retrieve the image instead of uploading to the image repository. |

| -z/--size <SIZE> | Specify the size of the image in gigabytes. The size must be large enough to install all the required software. The default and minimum size is 1.5 GB, which is enough for most cases. |

| --nopasswd | If this option is used, the password is not requested. This is intended for systems daemons like Inca. |
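The constraints listed in the option table above can be checked before a request is submitted. The following sketch encodes the supported operating systems, versions, architectures, and the minimum image size as stated in the table. The function name is hypothetical, and accepting either the Ubuntu codename or its version number for `-v` is an assumption; the examples in this document only use the number.

```python
from typing import List

# Values taken from the fg-generate option table.
SUPPORTED_VERSIONS = {
    "centos": {"5": "5", "6": "6"},
    "ubuntu": {"karmic": "9.10", "lucid": "10.04",
               "maverick": "10.10", "natty": "11.04"},
}
SUPPORTED_ARCHS = {"x86_64", "i386"}
MIN_SIZE_GB = 1.5  # default and minimum image size


def check_generate_request(os_name: str, version: str, arch: str,
                           size_gb: float = MIN_SIZE_GB) -> List[str]:
    """Return a list of problems; an empty list means the request looks valid."""
    problems = []
    versions = SUPPORTED_VERSIONS.get(os_name.lower())
    if versions is None:
        problems.append(f"unsupported OS: {os_name}")
    elif version not in versions and version not in versions.values():
        problems.append(f"unsupported {os_name} version: {version}")
    if arch not in SUPPORTED_ARCHS:
        problems.append(f"unsupported architecture: {arch}")
    if size_gb < MIN_SIZE_GB:
        problems.append(f"size {size_gb} GB is below the {MIN_SIZE_GB} GB minimum")
    return problems


if __name__ == "__main__":
    print(check_generate_request("ubuntu", "10.10", "x86_64"))
    print(check_generate_request("centos", "7", "x86_64"))
```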

Generate a CentOS image

$ fg-generate -o centos -v 5 -a x86_64 -s wget,emacs,python26 -u jdiaz

Generate an Ubuntu image

$ fg-generate -o ubuntu -v 10.10 -a x86_64 -s wget,openmpi-bin -u jdiaz

This service registers images in the selected infrastructures. After this process, images become available for instantiation in such infrastructures.

usage: fg-register [-h] -u user [-d] (-i ImgFile | -r ImgId | --list | --listkernels | --listsites | --deregister ImgId)
                   [-k Kernel version] [-a ramdiskId]
                   (-x SiteName | -e SiteName | -o SiteName | -n SiteName | -s SiteName)
                   [-v VARFILE] [--getimg] [--noldap] [--wait] [--nopasswd] [--justregister]

Options between brackets are optional. Parentheses mean that you need to specify exactly one of the enclosed options.

| Option | Description |

| -h/--help | Shows help information and exit. |

| -u/--user <userName> | FutureGrid HPC user name, that is, the one used to login into the FG resources. |

| -i/--image <imgFile> | Select the image to register by specifying its location. The image is a tgz file that contains the manifest and image files. |

| -r/--imgid <imgId> | Select the image to register by specifying its Id in the repository. |

| -k/--kernel <version> | Specify the desired kernel. Case a) if the image has to be adapted (any image generated with fg-generate) this option can be used to select one of the available kernels. Both kernelId and ramdiskId will be selected according to the selected kernel. This case is for any infrastructure. Case b) if the image is ready to be registered, you may need to specify the id of the kernel in the infrastructure. This case is when -j/--justregister is used and only for cloud infrastructures. |

| -a/--ramdisk <ramdiskId> | Specify the desired ramdisk that will be associated to your image in the cloud infrastructure. This option is only needed if -j/--justregister is used. |

| -l/--list | List images registered in the HPC or Cloud infrastructures. |

| -t/--listkernels | List kernels available for HPC or Cloud infrastructures. |

| --listsites | List supported sites with their respective HPC and Cloud services. |

| --deregister <imgId> | Deregister an image from the specified infrastructure. |

| -x/--xcat <SiteName> | Select the HPC infrastructure named SiteName (minicluster, india ...). |

| -e/--euca <SiteName> | Select the Eucalyptus Infrastructure located in SiteName (india, sierra...). |

| -s/--openstack <SiteName> | Select the OpenStack Infrastructure located in SiteName (india, sierra...). |

| -n/--nimbus <SiteName> | Select the Nimbus Infrastructure located in SiteName (india, sierra...). |

| -o/--opennebula <SiteName> | Select the OpenNebula Infrastructure located in SiteName (india, sierra...). |

| -v/--varfile <VARFILE> | Path of the environment variable files. Currently this is used by Eucalyptus, OpenStack and Nimbus. |

| -g/--getimg | Customize the image for a particular cloud framework but does not register it. So the user gets the image file. |

| -p/--noldap | If this option is active, FutureGrid LDAP will not be configured in the image. This option only works for Cloud registrations. LDAP configuration is needed to run jobs using fg-rain. |

| -w/--wait | Wait until the image is available in the targeted infrastructure. Currently this is used by Eucalyptus and OpenStack. |

| --nopasswd | If this option is used, the password is not requested. This is intended for systems daemons like Inca. |

| -j, --justregister | It assumes that the image is ready to run in the selected infrastructure. Thus, no additional configuration will be performed. Only valid for Cloud infrastructures. (This is basically a wrapper of the tools that register images into the cloud infrastructures) |

Register an image for the HPC Infrastructure India

$ fg-register -r 964160263274803087640112 -x india -u jdiaz

Register an image for OpenStack

$ fg-register -r 964160263274803087640112 -s india -v ~/novarc -u jdiaz

Customize an image for Eucalyptus but do not register it (here -v ~/eucarc is not needed because we are not going to register the image in the infrastructure)

$ fg-register -r 964160263274803087640112 -e sierra -g -u jdiaz

Register an image for Nimbus

$ fg-register -r 964160263274803087640112 -n hotel -v ~/hotel.conf -u jdiaz
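The registration examples above all follow the same pattern, so they can be generated from a script. The following is a minimal sketch, not part of FutureGrid Rain: the function `register_args`, its parameter names, and the `INFRA_FLAGS` mapping are hypothetical helpers, while the flags themselves (-r, -x, -e, -s, -n, -o, -v, -g, -u) are the ones listed in the option table.

```python
# Hypothetical helper: builds the argument list for fg-register from the
# flags documented in the option table. It does not run the command.
INFRA_FLAGS = {
    "hpc": "-x",
    "eucalyptus": "-e",
    "openstack": "-s",
    "nimbus": "-n",
    "opennebula": "-o",
}


def register_args(img_id, infra, site, user, varfile=None, getimg=False):
    """Return the fg-register arguments for an image stored in the repository."""
    args = ["fg-register", "-r", img_id, INFRA_FLAGS[infra], site]
    if varfile is not None:
        # Cloud registrations read credentials from an environment file.
        args += ["-v", varfile]
    if getimg:
        # Customize the image only; do not register it.
        args.append("-g")
    args += ["-u", user]
    return args


if __name__ == "__main__":
    cmd = register_args("964160263274803087640112", "openstack", "india",
                        "jdiaz", varfile="~/novarc")
    print(" ".join(cmd))
```

The resulting list can be passed to `subprocess.run` on a machine where fg-register is installed; a path such as ~/novarc would need `os.path.expanduser` first, because no shell is involved to expand it.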

List available kernels for the HPC infrastructure India

$ fg-register --listkernels -x india -u jdiaz

List available kernels for OpenStack

$ fg-register --listkernels -s india -u jdiaz

Deregister an image from OpenStack

$ fg-register --deregister ami-00000126 -s india -v ~/novarc -u jdiaz

Deregister an image from HPC (user role must be admin)

$ fg-register --deregister centosjdiaz1610805121 -x india -u jdiaz

List Information of the available sites

$ fg-register --listsites -u jdiaz

The output would be something like

Supported Sites Information

===========================

Cloud Information

-----------------

SiteName: sierra

Description: In this site we support Eucalyptus 3.

Infrastructures supported: ['Eucalyptus']

SiteName: hotel

Description: In this site we support Nimbus 2.9.

Infrastructures supported: ['Nimbus']

SiteName: india

Description: In this site we support Eucalyptus 2 and OpenStack Cactus. OpenNebula is not deployed in production but you can adapt images for it too.

Infrastructures supported: ['Eucalyptus', 'OpenStack', 'OpenNebula']

HPC Information (baremetal)

---------------------------

SiteName: india

RegisterXcat Service Status: Active

RegisterMoab Service Status: Active
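If the site list needs to be consumed by a script, the cloud part of this output can be turned into a dictionary. The sketch below is only an illustration: it assumes that each field (SiteName, Description, Infrastructures supported) is printed on its own line, and the function name `parse_cloud_sites` and the `SAMPLE` text are hypothetical, not something the tool provides.

```python
import ast

# Shortened sample in the assumed one-field-per-line layout.
SAMPLE = """\
Supported Sites Information
===========================
Cloud Information
-----------------
SiteName: sierra
Infrastructures supported: ['Eucalyptus']
SiteName: india
Infrastructures supported: ['Eucalyptus', 'OpenStack', 'OpenNebula']
HPC Information (baremetal)
---------------------------
SiteName: india
RegisterXcat Service Status: Active
"""


def parse_cloud_sites(text):
    """Map each cloud site name to its list of supported infrastructures."""
    sites = {}
    current = None
    in_cloud = False
    for raw in text.splitlines():
        line = raw.strip()
        if line.startswith("Cloud Information"):
            in_cloud = True
        elif line.startswith("HPC Information"):
            in_cloud = False  # HPC entries are ignored in this sketch
        elif in_cloud and line.startswith("SiteName:"):
            current = line.split(":", 1)[1].strip()
            sites[current] = []
        elif in_cloud and current and line.startswith("Infrastructures supported:"):
            # The value is printed as a Python-style list literal.
            sites[current] = ast.literal_eval(line.split(":", 1)[1].strip())
    return sites


if __name__ == "__main__":
    print(parse_cloud_sites(SAMPLE))
```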

Rain is a service that provides a command to dynamically deploy FutureGrid software environments and stacks.

usage: fg-rain [-h] -u user [-d] [-k Kernel version]

(-i ImgId | -r ImgId) (-x MachineName | -e [Address:port] | -s [Address])

[-v VARFILE] [-t <instancetype>] [--volume <size>] [-m #instances] [-w hours]

(-j JOBSCRIPT | -I | -b) [--nopasswd]

[--hadoop] [--inputdir INPUTDIR] [--outputdir OUTPUTDIR] [--hdfsdir HDFSDIR]

Options between brackets are not required. Parentheses mean that you need to specify one of the options.
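To make the bracket and parenthesis notation concrete, the sketch below models part of the usage line with Python's argparse. It is an illustration only and says nothing about how fg-rain itself parses its arguments: the program name `fg-rain-sketch` and the function `build_parser` are hypothetical, and only a subset of the options is included. Each parenthesized group becomes a required mutually exclusive group.

```python
import argparse


def build_parser():
    """Model a subset of the fg-rain usage line (illustrative only)."""
    p = argparse.ArgumentParser(prog="fg-rain-sketch")
    p.add_argument("-u", "--user", required=True)            # not in brackets: required
    p.add_argument("-v", "--varfile")                         # [-v VARFILE]: optional
    p.add_argument("-m", "--numberofmachines", type=int)      # [-m #instances]: optional

    # (-i ImgId | -r ImgId): exactly one of them
    image = p.add_mutually_exclusive_group(required=True)
    image.add_argument("-i", "--registeredimageid")
    image.add_argument("-r", "--imgid")

    # (-x MachineName | -e [Address:port] | -s [Address])
    infra = p.add_mutually_exclusive_group(required=True)
    infra.add_argument("-x", "--xcat")
    infra.add_argument("-e", "--euca", nargs="?", const="")       # argument may be omitted
    infra.add_argument("-s", "--openstack", nargs="?", const="")  # argument may be omitted

    # (-j JOBSCRIPT | -I | -b)
    mode = p.add_mutually_exclusive_group(required=True)
    mode.add_argument("-j", "--jobscript")
    mode.add_argument("-I", "--interactive", action="store_true")
    mode.add_argument("-b", "--background", action="store_true")
    return p


if __name__ == "__main__":
    args = build_parser().parse_args(
        ["-i", "ami-00000126", "-s", "-v", "~/novarc", "-m", "2", "-I", "-u", "jdiaz"])
    print(args)
```

Omitting every option of a parenthesized group, or giving two of them at once, makes the parser exit with an error, which mirrors the meaning of the parentheses in the usage line.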

| Option | Description | |

| -h/--help | Show help information and exit. | |

| -u/--user <userName> | FutureGrid HPC user name, that is, the one used to log in to the FG resources. | |

| -k/--kernel <version> | Specify the desired kernel (fg-register can list the available kernels for each infrastructure). | |

| -i/--registeredimageid <imgId> | Select the image to use by specifying its Id in the target infrastructure. This assumes that the image is registered in the selected infrastructure. | |

| -r/--imgid <imgId> | Select the image to use by specifying its Id in the repository. The image will be automatically registered in the infrastructure before the job is executed. | |

| -x/--xcat <MachineName> | Use the HPC infrastructure named MachineName (minicluster, india ...). | |

| -e/--euca [Address:port] | Use the Eucalyptus Infrastructure, which is specified in the argument. The argument should not be needed. | |

| -s/--openstack [Address] | Use the OpenStack Infrastructure, which is specified in the argument. The argument should not be needed. | |

| -n/--nimbus [Address] | (NOT yet supported) Use the Nimbus Infrastructure, which is specified in the argument. The argument should not be needed. | |

| -o/--opennebula [Address] | (NOT yet supported) Use the OpenNebula Infrastructure, which is specified in the argument. The argument should not be needed. | |

| -v/--varfile <VARFILE> | Path of the environment variable files. Currently this is used by Eucalyptus, OpenStack and Nimbus. | |

| -m/--numberofmachines <#instances> | Number of machines needed. | |

| --volume <size> | This creates and attaches a volume of the specified size (in GiB) to each instance. The volume will be mounted in /mnt/. This is supported by Eucalyptus and OpenStack. | |

| -t/--instance-type <instancetype> | VM Image type to run the instance as. Valid values: [‘m1.small’, ‘m1.large’, ‘m1.xlarge’] | |

| -w/--walltime <hours> | How long to run (in hours). You may use decimals. This is used for HPC and Nimbus. | |

| -j/--jobscript <JOBSCRIPT> | Script to execute on the provisioned images. In the case of Cloud environments, the user home directory is mounted in /tmp/N/u/<username>. The /N/u/<username> directory is only used for ssh between VMs and to store the IPs of the parallel job in a file called /N/u/<username>/machines. | |

| -I/--interactive | Interactive mode. It boots VMs or provisions bare-metal machines. Then, the user is automatically logged into one of the VMs/machines. | |

| -b, --background | Background mode. It boots VMs or provisions bare-metal machines. Then, it gives you the information you need to know to log in anytime. | |

| --nopasswd | If this option is used, the password is not requested. This is intended for system daemons like Inca. | |

| Hadoop Option | Additional options to run hadoop jobs or go interactive into a hadoop cluster | |

| --hadoop | Specify that you want to execute a Hadoop job. Rain will set up a Hadoop cluster in the selected infrastructure. It assumes that Java is installed in the image/machine. | |

| --inputdir <inputdir> | Location of the directory containing the job input data that has to be copied to HDFS. The HDFS directory will have the same name. Thus, if this option is used, the job script has to specify the name of the directory (not the full path). | |

| --outputdir <outputdir> | Location of the directory to store the job output data from HDFS. The HDFS directory will have the same name. Thus, if this option is used, the job script has to specify the name of the directory (not the full path). | |

| --hdfsdir <hdfsdir> | Location of the HDFS directory to use in the machines. If not provided /tmp/ will be used. |
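The rule for --inputdir and --outputdir (the HDFS directory takes the same name as the local directory, so the job script uses the name only) can be illustrated with a small helper. This is a sketch of the naming rule as described in the table; the function `hdfs_dir_name` is hypothetical and is not part of fg-rain.

```python
import os


def hdfs_dir_name(local_dir):
    """Return the directory name a job script would use inside HDFS."""
    expanded = os.path.expanduser(local_dir)
    # normpath drops a trailing slash so that basename returns the last component.
    return os.path.basename(os.path.normpath(expanded))


if __name__ == "__main__":
    print(hdfs_dir_name("~/inputdir1/"))
    print(hdfs_dir_name("~/outputdir"))
```

With --inputdir ~/inputdir1/ and --outputdir ~/outputdir, the job script therefore refers to inputdir1 and outputdir.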

Run a job in four nodes using an image stored in the Image Repository (This involves the registration of the image on xCAT/Moab)

$ fg-rain -r 1231232141 -x india -m 4 -j myscript.sh -u jdiaz

Run a job in two nodes using an image already registered on the HPC infrastructure (xCAT/Moab)

$ fg-rain -i centosjavi434512 -x india -m 2 -j myscript.sh -u jdiaz

Interactive mode. Instantiate two VMs using an image already registered on OpenStack

$ fg-rain -i ami-00000126 -s -v ~/novarc -m 2 -I -u jdiaz

Run a job in a VM using an image already registered on Eucalyptus

$ fg-rain -i ami-00000126 -e -v ~/eucarc -j myscript.sh -u jdiaz

Run an MPI job in six VMs using an image already registered on Eucalyptus (the image has to have the mpich2 package installed)

$ fg-rain -i ami-00000126 -e -v ~/eucarc -j mpichjob.sh -m 6 -u jdiaz

Content of mpichjob.sh:

#!/bin/bash

#real home is /tmp/jdiaz/

#VM home is /N/u/jdiaz/

#$HOME/machines is a file with the VMs involved in this job

cd /tmp/N/u/jdiaz/mpichexample/

mpiexec.hydra -machinefile /N/u/jdiaz/machines -np `wc -l /N/u/jdiaz/machines | cut -d" " -f1` /tmp/N/u/jdiaz/example/a.out > /tmp/N/u/jdiaz/output.mpichexample
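The backquoted `wc -l ... | cut` expression in the script derives the number of MPI processes from the number of lines in the machines file. The same logic is sketched below in Python; the functions `count_machines` and `mpiexec_args` are hypothetical helpers written for this illustration, and the demo uses a temporary file rather than the real /N/u/<username>/machines.

```python
import os
import tempfile


def count_machines(machinefile):
    """Count the entries in a machines file (one host or IP per line)."""
    with open(machinefile) as fh:
        return sum(1 for line in fh if line.strip())


def mpiexec_args(machinefile, program):
    """Build the mpiexec.hydra argument list used in the job script."""
    nprocs = count_machines(machinefile)
    return ["mpiexec.hydra", "-machinefile", machinefile,
            "-np", str(nprocs), program]


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as tmp:
        machines = os.path.join(tmp, "machines")
        with open(machines, "w") as fh:
            fh.write("10.0.0.1\n10.0.0.2\n10.0.0.3\n")
        print(mpiexec_args(machines, "./a.out"))
```

Unlike `wc -l`, this sketch skips blank lines, which avoids starting an extra process if the file ends with an empty line.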

Run Hadoop job on three VMs using an image already registered on OpenStack (the image has to have java package installed. Hadoop is automatically installed/configured by the tool.)

$ fg-rain -i ami-000001bf -s -v ~/novarc -j ~/hadoopword.sh -m 3 --inputdir ~/inputdir1/ --outputdir ~/outputdir -u jdiaz

- For this example, the inputdir1 directory contains ebooks from the Project Gutenberg downloaded in Plain Text UTF-8 encoding:

Content of hadoopword.sh:

hadoop jar $HADOOP_CONF_DIR/../hadoop-examples*.jar wordcount inputdir1 outputdir
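For readers unfamiliar with the wordcount example, the sketch below shows on a single machine what kind of result it computes: the number of occurrences of each whitespace-separated word across all files of the input directory. It is plain Python, not Hadoop, and the function name `wordcount` is chosen for this illustration only; it can be handy for checking the job output on a small sample of the input.

```python
import os
import tempfile
from collections import Counter
from pathlib import Path


def wordcount(input_dir):
    """Count whitespace-separated words in every file of input_dir."""
    counts = Counter()
    for path in sorted(Path(input_dir).iterdir()):
        if not path.is_file():
            continue
        with open(path, encoding="utf-8") as fh:
            for line in fh:
                counts.update(line.split())
    return counts


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as tmp:
        with open(os.path.join(tmp, "book.txt"), "w", encoding="utf-8") as fh:
            fh.write("the rain in the grid\nthe end\n")
        for word, n in sorted(wordcount(tmp).items()):
            print(word, n)
```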

Interactive mode. Setup a Hadoop cluster in three VMs using an image already registered on OpenStack (the image has to have java package installed. Hadoop is automatically installed/configured by the tool.)

$ fg-rain -i ami-000001bf -s -v ~/novarc -I -m 3 --inputdir ~/inputdir1/ --outputdir ~/outputdir -u jdiaz

- For this example, the inputdir1 directory contains ebooks from the Project Gutenberg downloaded in Plain Text UTF-8 encoding:

Content of hadoopword.sh:

hadoop jar $HADOOP_CONF_DIR/../hadoop-examples*.jar wordcount inputdir1 outputdir

Run a Hadoop job on three machines using an image already registered on the HPC infrastructure (the image has to have the java package installed. Hadoop is automatically installed/configured by the tool.)

$ fg-rain -x india -j ~/hadoopword.sh -m 3 --inputdir ~/inputdir1/ --outputdir ~/outputdir --walltime 1 -u jdiaz

- For this example, the inputdir1 directory contains ebooks from the Project Gutenberg downloaded in Plain Text UTF-8 encoding:

Content of hadoopword.sh:

hadoop jar $HADOOP_CONF_DIR/../hadoop-examples*.jar wordcount inputdir1 outputdir

The FutureGrid shell simplifies access to the different FutureGrid software components. It is a common entry point for all components and offers a customized environment where only the FutureGrid commands are available. It has features similar to those of a regular GNU/Linux shell, such as command auto-completion.

usage: fg-shell [-h] -u user [-q] [-i] [-f script_file] [--nopasswd]

Options between brackets are not required. Parentheses mean that you need to specify one of the options.

| Option | Description |

| -h/--help | Show help information and exit. |

| -u/--user <userName> | FutureGrid HPC user name, that is, the one used to log in to the FG resources. |

| -q/--quiet | Do not load the banner and welcome messages. |

| -i/--interactive | After the commands are interpreted, the shell is put into interactive mode. |

| -f/--file <script_file> | Execute commands from a file. |

| --nopasswd | If this option is used, the password is not requested. This is intended for system daemons like Inca. |

The shell is executed by typing:

fg-shell -u <username>

After executing the previous command and typing your password, the prompt should change to fg>.

Note

Using the shell from outside FutureGrid: If the shell is installed outside of FutureGrid, users will not be able to enter the shell using their passwords. For security reasons, the LDAP server cannot be contacted from outside of FutureGrid. Therefore, users have to use the option --nopasswd and set their password inside the shell by executing the command setpasswd. In this way, users can authenticate against the different FutureGrid components without typing the password every time.

This is useful when users install the shell on their local machines, where they cannot enter the shell by typing their password.

Note

Context concept: To use the shell, it is essential to understand the concept of CONTEXT. A context is an environment specialized for a particular tool or service. This allows us to use only the components we are interested in and to organize the commands by component. For example, if we want to use the image repository, we initialize its context by typing use repo, and only the image repository commands will be available. See the contexts and use commands.
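The context mechanism can be pictured with a few lines of Python. The sketch below uses the standard cmd module to show how a use command can switch the prompt and the active context; it is a toy model under that assumption, not the FutureGrid Shell implementation, and the class name `ContextShell` is hypothetical.

```python
import cmd


class ContextShell(cmd.Cmd):
    """Toy shell whose prompt follows the active context."""

    CONTEXTS = ("repo", "image", "rain", "hadoop")
    prompt = "fg> "

    def __init__(self, *args, **kwargs):
        super().__init__(*args, **kwargs)
        self.context = None  # None stands for the generic context

    def do_contexts(self, arg):
        """List the available contexts."""
        for name in self.CONTEXTS:
            self.stdout.write(name + "\n")

    def do_use(self, arg):
        """use [context]: switch to a context, or back to the generic one."""
        name = arg.strip()
        if not name:
            self.context, self.prompt = None, "fg> "
        elif name in self.CONTEXTS:
            self.context, self.prompt = name, "fg-%s> " % name
        else:
            self.stdout.write("Unknown context: %s\n" % name)

    def do_quit(self, arg):
        """Leave the shell."""
        return True


if __name__ == "__main__":
    ContextShell().cmdloop()
```

Typing use repo changes the prompt to fg-repo>, and use without arguments returns to fg>.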

Next, we explain the available commands that you can find inside the FutureGrid Shell.

Commands listed in this section are available in any context.

Help Related Commands

| Command | Description |

| help [command] | Show the help message for a command. Without an argument in the generic context fg>, list all available commands organized by context (see the use and contexts commands). In a specific context (e.g. fg-repo>), show only the generic commands and the commands specific to that context. |

| manual | List the help message for all commands in the shell. |

| shortcuts | List all available shortcuts. |

CONTEXT Related Commands

| Command | Description |

| contexts | List of available contexts. |

| use [context] | Change the Shell CONTEXT to use a specified FG component. If no argument is provided, it returns to the default context. |

History Related Commands

| Command | Description |

| history, hist and hi | Show the history of executed commands. |

| historysession, hists and his | Show the history of the commands executed in the current session. |

| l, li | List the last executed command. |

| save [N] | Save session history to a file. N is the number of a command (from historysession), or *. The most recent command is used if N is omitted. |

Execution Related Commands

| Command | Description |

| load <filename> and exec <filename> | Load commands from a script and stay in the shell. |

| pause [text] | Display the specified text, then wait for the user to press RETURN. |

| py [command] | py <command>: Executes a Python command. py: Enters interactive Python mode. End with Ctrl-D (Unix) / Ctrl-Z (Windows), quit(), exit(). |

| run, r | Re-run the last executed command. |

| script [filename] | When script is active, all executed commands are stored in a file. Activate it by executing: script [file]. If no argument is provided, the file will be called script and will be located in your current directory. To finish and store the commands, execute: script end |

User-Settable Parameters Related Commands

| Command | Description |

| setpasswd | Set the password for the current session without leaving the shell. The password is stored encrypted. |

| set [parameter] [value] | Set a cmd2 parameter. Call without arguments for a list of settable parameters with their values. |

| show | List the settable parameters with their values. |

These commands are available when the Image Repository (repo) or Image Management (image) contexts are active. To activate the image repository context, execute use repo. If we execute help, we will see which commands are generic and which ones are specific to this context.

Image Related Commands

| Command | Description |

| list [queryString] | Get the list of images that meet the criteria. |

| get <imgId> | Get an image by specifying its unique identifier. |

| put <imgFile> [attributeString] | Store an image in the repository together with the metadata defined in attributeString. Default metadata is provided if the argument is missing. |

| modify <imgId> <attributeString> | Modify the metadata associated with the image. |

| remove <imgId> | Delete images from the repository. |

| setpermission <imgId> <permissionString> | Change the permission of a particular image. Valid values are public, private. |

| histimg [imgId] | Get usage information of an image. If no argument is provided, it shows the usage information of all images. |

User Related Commands

The following options are available only for users with admin role.

| Command | Description |

| user <options> | Manage the image management user database. Options: -a/--add <userId> Add a new user to the image management database. -d/--del <userId> Delete a user from the image management database. -l/--list List of users. -m/--modify <userId> <quota/role/status> <value> Modify the quota, role or status of a user. |

| histuser [userId] | Get usage information of a user. If no argument is provided, it shows the usage information of all users. This command can also be used by normal users to show their own information. |

These commands are available when the Image Management (image) or the Rain (rain) contexts are active. To activate the image management context, execute use image. If we execute help, we will see which commands are generic and which ones are specific to this context.

| Command | Description |

| generate <options> | Generates images with the requested OS and software stack specification. Options: -o/--os <osName> Specify the desired Operating System for the new image. Currently, CentOS and Ubuntu are supported. -v/--version <osVersion> Operating System version. In the case of CentOS, it can be 5 or 6. In the case of Ubuntu, it can be karmic (9.10), lucid (10.04), maverick (10.10) or natty (11.04). -a/--arch <arch> Destination hardware architecture (x86_64 or i386). --baseimage Generate a Base Image that will be used to generate other images. In this way, the image generation process will be faster. -s/--software <software> List of software packages, separated by commas, that will be installed in the image. --scratch Generate the image from scratch without using any Base Image from the repository. -n/--name <givenname> Desired recognizable name of the image. -e/--description <description> Short description of the image and its purpose. -g/--getimg Retrieve the image instead of uploading it to the image repository. -z/--size <SIZE> Specify the size of the image in gigabytes. The size must be large enough to install all the software required. The default and minimum size is 1.5GB, which is enough for most cases. |

These commands are available when the Image Management (image) or the Rain (rain) contexts are active. To activate the image management context, execute use image. If we execute help, we will see which commands are generic and which ones are specific to this context.

| Command | Description |

| register <options> | Registers images in the selected infrastructures. After this process, images become available for instantiation in such infrastructures. Options -k/--kernel <version> Specify the desired kernel. -i/--image <imgFile> Select the image to register by specifying its location. The image is a tgz file that contains the manifest and image files. -r/--imgid <imgId> Select the image to register by specifying its Id in the repository. -x/--xcat <SiteName> Select the HPC infrastructure named SiteName (minicluster, india ...). -e/--euca <SiteName> Select the Eucalyptus Infrastructure located in SiteName (india, sierra...). -s/--openstack <SiteName> Select the OpenStack Infrastructure located in SiteName (india, sierra...). -n/--nimbus <SiteName> Select the Nimbus Infrastructure located in SiteName (india, sierra...). -o/--opennebula <SiteName> Select the OpenNebula Infrastructure located in SiteName (india, sierra...). -v/--varfile <VARFILE> Path of the environment variable files. Currently this is used by Eucalyptus, OpenStack and Nimbus. -g/--getimg Customize the image for a particular cloud framework but does not register it. So the user gets the image file. -p/--noldap If this option is active, FutureGrid LDAP will not be configured in the image. This option only works for Cloud registrations. LDAP configuration is needed to run jobs using fg-rain -w/--wait Wait until the image is available in the targeted infrastructure. Currently this is used by Eucalyptus and OpenStack. |

| cloudlist <options> | List images registered in the Cloud infrastructures. Options -e/--euca <SiteName> Select the Eucalyptus Infrastructure located in SiteName (india, sierra...). -n / --nimbus <SiteName> Select the Nimbus Infrastructure located in SiteName (india, sierra...). -o / --opennebula <SiteName> Select the OpenNebula Infrastructure located in SiteName (india, sierra...). -s / --openstack <SiteName> Select the OpenStack Infrastructure located in SiteName (india, sierra...). -v/--varfile <VARFILE> Path of the environment variable files. Currently this is used by Eucalyptus, OpenStack and Nimbus. |

| cloudlistkernels <options> | List kernels available for the Cloud infrastructures. Options -e/--euca <SiteName> Select the Eucalyptus Infrastructure located in SiteName (india, sierra...). -n / --nimbus <SiteName> Select the Nimbus Infrastructure located in SiteName (india, sierra...). -o / --opennebula <SiteName> Select the OpenNebula Infrastructure located in SiteName (india, sierra...). -s / --openstack <SiteName> Select the OpenStack Infrastructure located in SiteName (india, sierra...). |

| hpclist <SiteName> | List images registered in the HPC infrastructure named SiteName (minicluster, india ...). |

| hpclistkernels <SiteName> | List kernels available for HPC infrastructure named SiteName (minicluster, india ...). |

| listsites | List supported sites with their respective HPC and Cloud services. |

| deregister <options> | Deregister an image from the specified infrastructure. Options --deregister <imageId> -x/--xcat <SiteName> Select the HPC infrastructure named SiteName (minicluster, india ...). -e/--euca <SiteName> Select the Eucalyptus Infrastructure located in SiteName (india, sierra...). -n / --nimbus <SiteName> Select the Nimbus Infrastructure located in SiteName (india, sierra...). -o / --opennebula <SiteName> Select the OpenNebula Infrastructure located in SiteName (india, sierra...). -s / --openstack <SiteName> Select the OpenStack Infrastructure located in SiteName (india, sierra...). -v/--varfile <VARFILE> Path of the environment variable files. Currently this is used by Eucalyptus, OpenStack and Nimbus. |

These commands are available when the Rain (rain) context is active. To activate the rain context, execute use rain. If we execute help, we will see which commands are generic and which ones are specific to this context.

| Command | Description | |

| launch <options> | Provision machines or VMs with the requested OS and execute a job or enter in interactive mode Options -k/--kernel <version> Specify the desired kernel. -i/--image <imgFile> Select the image to register by specifying its location. The image is a tgz file that contains the manifest and image files. -r/--imgid <imgId> Select the image to register by specifying its Id in the repository. -x/--xcat <SiteName> Select the HPC infrastructure named SiteName (minicluster, india ...). -e/--euca <SiteName> Select the Eucalyptus Infrastructure located in SiteName (india, sierra...). -s/--openstack <SiteName> Select the OpenStack Infrastructure located in SiteName (india, sierra...). -n/--nimbus <SiteName> Select the Nimbus Infrastructure located in SiteName (india, sierra...). -o/--opennebula <SiteName> Select the OpenNebula Infrastructure located in SiteName (india, sierra...). -v/--varfile <VARFILE> Path of the environment variable files. Currently this is used by Eucalyptus, OpenStack and Nimbus. -m/--numberofmachines <#instances> Number of machines needed. --volume <size> This creates and attaches a volume of the specified size (in GiB) to each instance. The volume will be mounted in /mnt/. This is supported by Eucalyptus and OpenStack. -t/--instance-type <instancetype> VM Image type to run the instance as. Valid values: [‘m1.small’, ‘m1.large’, ‘m1.xlarge’] -w/--walltime <hours> How long to run (in hours). You may use decimals. This is used for HPC and Nimbus. -j/--jobscript <JOBSCRIPT> Script to execute on the provisioned images. In the case of Cloud environments, the user home directory is mounted in /tmp/N/u/<username>. The /N/u/<username> is only used for ssh between VM and store the ips of the parallel job in a file called /N/u/<username>/machines -I/--interactive Interactive mode. It boots VMs or provisions bare-metal machines. Then, the user is automatically logged into one of the VMs/machines. -b, --background Background mode. It boots VMs or provisions bare-metal machines. Then, it gives you the information you need to know to log in anytime. -w/--wait Wait until the image is available in the targeted infrastructure. Currently this is used by Eucalyptus and OpenStack. |


| launchhadoop <options> | Provision machines or VMs with the requested OS, install/configure Hadoop and execute a job or enter interactive mode. Options: the same options as launch. Additional Hadoop options: --inputdir <inputdir> Location of the directory containing the job input data that has to be copied to HDFS. The HDFS directory will have the same name. Thus, if this option is used, the job script has to specify the name of the directory (not the full path). --outputdir <outputdir> Location of the directory to store the job output data from HDFS. The HDFS directory will have the same name. Thus, if this option is used, the job script has to specify the name of the directory (not the full path). --hdfsdir <hdfsdir> Location of the HDFS directory to use in the machines. If not provided /tmp/ will be used. |


| cloudinstanceslist <options> | List the information of the instance/s submitted to the selected cloud. Options -i/--instance [InstanceId/s] Id of the instance to check status. This is optional, if not provided all instances will be listed. -e/--euca <SiteName> Select the Eucalyptus Infrastructure located in SiteName (india, sierra...). -s/--openstack <SiteName> Select the OpenStack Infrastructure located in SiteName (india, sierra...). -n/--nimbus <SiteName> Select the Nimbus Infrastructure located in SiteName (india, sierra...). -o/--opennebula <SiteName> Select the OpenNebula Infrastructure located in SiteName (india, sierra...). -v/--varfile <VARFILE> Path of the environment variable files. Currently this is used by Eucalyptus, OpenStack and Nimbus. |


| hpcjobslist [job/s] | List the information of the HPC job/s. | |

| cloudinstancesterminate <options> | Terminate instance/s from the selected cloud. You can specify a list of instances ids and also reservations ids. Options -i/--instance <InstanceId/s> Id/s of the instance/s or reservation/s to terminate. -e/--euca <SiteName> Select the Eucalyptus Infrastructure located in SiteName (india, sierra...). -s/--openstack <SiteName> Select the OpenStack Infrastructure located in SiteName (india, sierra...). -n/--nimbus <SiteName> Select the Nimbus Infrastructure located in SiteName (india, sierra...). -o/--opennebula <SiteName> Select the OpenNebula Infrastructure located in SiteName (india, sierra...). -v/--varfile <VARFILE> Path of the environment variable files. Currently this is used by Eucalyptus, OpenStack and Nimbus. |


| hpcjobsterminate <job/s> | Terminate HPC job/s. |

Context Usage

Show list of available contexts

$ fg-shell -u jdiaz

fg> contexts

The output shows all available contexts

FG Contexts:

------------

repo

image

rain

hadoop

Users can select any of the previous contexts with the use command. Then, the environment of this particular context is initialized.

fg> use repo

fg-repo>

Return to the normal context

fg-repo> use

fg>

Help Usage

List available commands in the generic context

$ fg-shell -u jdiaz

fg> help

The output shows the list of generic commands and the list of commands that are available in each of the contexts. Note that the commands listed for each context are only available when that particular context has been loaded. Some contexts load other contexts as part of their requirements, as we explained before.

A complete manual can be found in https://portal.futuregrid.org/man/fg-shell

Generic Documented commands (type help <topic>):

================================================

contexts history load py save setpasswd use

exec historysession manual quit script shortcuts

help li pause run set show

Image Repository commands. Execute "use repo" to use them. (type help <topic>):

===============================================================================

get histimg histuser list modify put remove setpermission user

Image Management commands. Execute "use image" to use them. (type help <topic>):

================================================================================

cloudlist cloudlistkernels generate hpclist hpclistkernels register

FG Dynamic Provisioning commands. Execute "use rain" to use them. (type help <topic>):

======================================================================================

launch launchhadoop

Please select a CONTEXT by executing use <context_name>

Execute 'contexts' command to see the available context names

List available commands in the image context (this context also loads the repo context)

fg> use image

fg-image> help

The output is something like this.

A complete manual can be found in https://portal.futuregrid.org/man/fg-shell

General documented commands (type help <topic>):

================================================

contexts history load py save setpasswd use

exec historysession manual quit script shortcuts

help li pause run set show

Specific documented commands in the repo context (type help <topic>):

=====================================================================

get histimg histuser list modify put remove setpermission user

Specific documented commands in the image context (type help <topic>):

======================================================================

cloudlist cloudlistkernels generate hpclist hpclistkernels register

General Shell Usage

Session example where we get an image, list all the images whose OS is CentOS, add a user and activate it.

$ fg-shell -u jdiaz

fg> use repo

fg-repo> get image123123123

fg-repo> list * where os=centos

fg-repo> user -a javi

fg-repo> user -m javi status active

Record the executed commands in a script.

$ fg-shell -u jdiaz

fg> script myscript.txt

fg> use repo

fg-repo> put /tmp/image.img vmtype=xen & imgtype=opennebula & os=linux & arch=x86_64

fg-repo> list

fg-repo> script end

The file myscript.txt then contains the recorded commands:

use repo

put /tmp/image.img vmtype=xen & imgtype=opennebula & os=linux & arch=x86_64

list
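The attributeString passed to put is a sequence of key=value pairs joined by &. When such strings are generated or checked from a script, it can help to convert them to a dictionary first. The parser below is a minimal sketch based only on the form shown in the example; the function name `parse_attributes` is hypothetical and the repository may accept or validate attributes differently.

```python
def parse_attributes(attribute_string):
    """Split 'key=value & key=value' into a dictionary."""
    attrs = {}
    for pair in attribute_string.split("&"):
        pair = pair.strip()
        if not pair:
            continue
        key, sep, value = pair.partition("=")
        if not sep:
            raise ValueError("expected key=value, got %r" % pair)
        attrs[key.strip()] = value.strip()
    return attrs


if __name__ == "__main__":
    print(parse_attributes(
        "vmtype=xen & imgtype=opennebula & os=linux & arch=x86_64"))
```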

Execute shell commands stored in a file. The shell exits afterwards.

$ cat myscript.txt| fg-shell

Execute shell commands stored in a file from inside the shell. The shell stays open afterwards.

$ fg-shell -u jdiaz

fg> load myscript.txt

Note

These tools require users to authenticate using their usernames and portal passwords.

In this section you will find information on software deployment details.

You can install the FutureGrid Rain using one of the following procedures.

This option has the following prerequisites:

If you meet these prerequisites, you should be able to install FutureGrid Rain simply by running this as root:

easy_install -U futuregrid

If you are using Ubuntu or Mac OS X, you will likely just need to run this:

sudo easy_install -U futuregrid

If you do not have administrative privileges on your machine, you will have to install FutureGrid Rain under your regular user account:

easy_install -U futuregrid --user

Note

Installing FutureGrid Rain in your home directory will install the FutureGrid Rain commands in ~/.local/bin, which may not be in your PATH environment variable. If not, make sure to update the definition of your PATH environment variable (e.g., in the ~/.profile file if you are using a BASH shell).

Alternatively, you can also request that the commands be installed in a directory that is already in your $PATH. You may want to use ~/bin/, as most Linux distributions will automatically include that directory in your PATH.

easy_install -U futuregrid --user -s ~/bin/
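To check whether the directory used by a user-level installation is already on PATH, a short Python snippet can help. This is a generic sketch unrelated to the FutureGrid code base: the function `is_on_path` is hypothetical, and `site.getuserbase()` is used on the assumption that the per-user scheme places commands in its bin subdirectory (~/.local/bin on typical Linux systems).

```python
import os
import site


def is_on_path(directory, path_env=None):
    """Return True if directory is one of the entries of PATH."""
    if path_env is None:
        path_env = os.environ.get("PATH", "")
    target = os.path.normpath(os.path.expanduser(directory))
    entries = [os.path.normpath(os.path.expanduser(p))
               for p in path_env.split(os.pathsep) if p]
    return target in entries


if __name__ == "__main__":
    user_bin = os.path.join(site.getuserbase(), "bin")
    if is_on_path(user_bin):
        print(user_bin, "is on PATH")
    else:
        print("Add", user_bin, "to PATH, e.g. in ~/.profile")
```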

If you do not have Python Distribute, or are unable to install it, you can still install FutureGrid Rain by downloading a source tarball yourself. This tarball contains an installation script that will install and setup Python Distribute, and then proceed to install FutureGrid Rain.

You will first have to download the latest source tarball from the Python Package Index: http://pypi.python.org/pypi/futuregrid

Next, untar the tarball and run the installation script as root:

tar xvzf futuregrid-1.0.1.tar.gz

cd futuregrid-1.0.1

python setup.py install

Note

If you are using Ubuntu or Mac OS X, you will likely just need to run this:

sudo python setup.py install

If you do not have administrative privileges on your machine, you can choose to install everything inside your home directory:

python setup.py install --user

If you want to use the latest version of our code from our GitHub repository, the steps are similar to installing a source tarball. However, instead of downloading a tarball, you will use git to clone our repository on your machine. Simply run the following:

git clone git@github.com:futuregrid/rain.git

This will create a directory called rain. In it, you will find the same setup.py script described in the previous section. If you want to install FutureGrid Rain, and not make any modifications to the code, you should run python setup.py install as described in the previous section.

If you intend to modify the code, and want the FutureGrid Rain commands to use the code in the git repository you’ve created on your machine, you can instead install FutureGrid Rain in “developer” mode:

python setup.py develop

This will install FutureGrid Rain but, instead of copying the Python source code to a system directory, it will create a pointer to the source directory you checked out. That way, any changes you make to the source code will take effect immediately (without having to reinstall FutureGrid Rain).

Take into account that there are at least two branches in our GitHub repository: master and dev. The former always contains the latest stable release, including bug fixes, and the latter contains the very latest version of our code (which may not work as reliably as the code in the master branch). By default, your repository will track the master branch. To switch to the dev branch, run the following:

git checkout dev

To pull the latest changes from our GitHub repository, run the following:

git pull origin

There are two places where we can locate the configuration files. Our software will look into these places in the following order:

If you have installed FutureGrid Rain using the tarball file (Using a source tarball) you will find the configuration sample files in /etc/futuregrid/. Otherwise, you can download them as a tarball or a ZIP file.

Server Side: The configuration file has to be renamed as fg-server.conf.

Client Side: The configuration file has to be renamed as fg-client.conf.

Note

If you configure several clients or servers on the same machine, they must all share the same fg-client.conf or fg-server.conf file.

Note

On the client side, the path of the log files must be relative to each user. Using the $HOME directory is a good idea.

The authentication of our software is based on LDAP. So, we need to configure some options in the configuration files to make it possible.

We need to configure the [LDAP] section. This is going to be used by all servers. More information about this section of the server configuration file can be found in LDAP section.

[LDAP]
LDAPHOST= ldap.futuregrid.org
LDAPUSER= uid=rainadmin,ou=People,dc=futuregrid,dc=org
LDAPPASS= passwordrainadmin
log= ~/fg-auth.log

We need to configure the [LDAP] section. This is going to be used by the FutureGrid Shell. It allows the shell to store your encrypted password once it has been validated, so you won’t have to type the password again during that session. More information about this section of the client configuration file can be found in LDAP section.

[LDAP]
LDAPHOST=ldap.futuregrid.org
log=~/fg-auth.log
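As a quick sanity check of the client [LDAP] section, the following minimal sketch reads the two options with Python's standard configparser module. It only illustrates the file format; the function name read_ldap_settings is hypothetical, and this is not necessarily how FutureGrid Rain itself loads its configuration.

```python
import configparser
import os

# Sample text mirroring the client-side [LDAP] section shown above.
SAMPLE = """\
[LDAP]
LDAPHOST=ldap.futuregrid.org
log=~/fg-auth.log
"""


def read_ldap_settings(text):
    """Return the LDAP host and the log path (with ~ expanded) from config text.

    Hypothetical helper for illustration only.
    """
    parser = configparser.ConfigParser(interpolation=None)
    parser.read_string(text)
    section = parser["LDAP"]
    return {
        "host": section["LDAPHOST"].strip(),
        "log": os.path.expanduser(section["log"].strip()),
    }


if __name__ == "__main__":
    print(read_ldap_settings(SAMPLE))
```

To check a real file, the same function can be called as read_ldap_settings(open(path).read()), where path points to your fg-client.conf.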

This server will contain configuration files and kernel files that are needed by the different components of the FutureGrid software.

Setting up an Apache server.

sudo yum install httpd

or

sudo apt-get install apache2

sudo /etc/init.d/httpd start

Copy all configuration files into /var/www/html/ or the directory specified in httpd.conf if you are not using the default options. The configuration files are in the FutureGrid private svn.

In this section we explain how to configure the Image Repository.

On the server side we need to configure several sections. The main one is [RepoServer], and we have to create another section with the name of the backend system that we want to use (see RepoServer section). Our image repository supports different backends, which are described in the next table:

| Backend option | Storage for Image Files | Storage for Metadata |
|---|---|---|
| mysql | POSIX Filesystem | MySQL |
| mongodb | MongoDB | MongoDB |
| swiftmysql | Swift | MySQL |
| swiftmongo | Swift | MongoDB |
| cumulusmysql | Cumulus | MySQL |
| cumulusmongo | Cumulus | MongoDB |

Note

Installation instructions for the software to be used as storage backend can be found in Installing Image Repository Backends

Our predefined option is cumulusmongo. Thus, the [RepoServer] section looks like:

[RepoServer]
port = 56792
proc_max = 10
refresh = 20
nopasswdusers = testuser:127.0.0.1,127.0.0.2; testuser2:127.0.0.1
backend = cumulusmongo
log = ~/reposerver.log
log_level = debug
ca_cert= /etc/futuregrid/certs/imdserver/cacert.pem
certfile= /etc/futuregrid/certs/imdserver/imdscert.pem
keyfile= /etc/futuregrid/certs/imdserver/privkey.pem
restConfFile = /etc/futuregrid/fg-restrepo.conf
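The nopasswdusers value in the example appears to be a semicolon-separated list of entries of the form user:address,address. The sketch below splits such a value into a dictionary, which can help when checking a long list by hand. The format is inferred from the example only, and parse_nopasswdusers is a hypothetical helper, not part of FutureGrid Rain.

```python
def parse_nopasswdusers(value):
    """Split 'user:ip,ip; user2:ip' into {user: [ip, ...]}.

    The format is an assumption based on the sample configuration.
    """
    users = {}
    for entry in value.split(";"):
        entry = entry.strip()
        if not entry:
            continue  # tolerate trailing or doubled semicolons
        user, _, addresses = entry.partition(":")
        users[user.strip()] = [a.strip() for a in addresses.split(",") if a.strip()]
    return users


if __name__ == "__main__":
    sample = "testuser:127.0.0.1,127.0.0.2; testuser2:127.0.0.1"
    for user, addresses in parse_nopasswdusers(sample).items():
        print(user, addresses)
```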

Note

You may need to configure the iptables to open the port specified in the port option to allow the communication with the client.

Since we have specified backend = cumulusmongo, we also have to add a section named [cumulusmongo] (see Backend Example Section)

[cumulusmongo]
address = localhost:23000
userAdmin =
configfile =
addressS = 192.168.1.2
userAdminS = PgkhmT23FUv7aRZND7BOW
configfileS = /etc/futuregrid/cumulus.conf
imgStore =/temp/
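Because the backend option must name a section that exists in the same file, a small consistency check can catch typos before the server is started. The following sketch uses Python's configparser; check_backend_section is a hypothetical name, and the check itself is our suggestion rather than something the server is documented to perform.

```python
import configparser

# Shortened sample with a [RepoServer] section and its backend section.
SAMPLE = """\
[RepoServer]
port = 56792
backend = cumulusmongo

[cumulusmongo]
address = localhost:23000
userAdmin =
configfile =
imgStore =/temp/
"""


def check_backend_section(text):
    """Return the backend name, or raise ValueError if its section is missing."""
    parser = configparser.ConfigParser(interpolation=None)
    parser.read_string(text)
    backend = parser["RepoServer"]["backend"].strip()
    if not parser.has_section(backend):
        raise ValueError("backend = %s, but there is no [%s] section" % (backend, backend))
    return backend


if __name__ == "__main__":
    print("backend section found:", check_backend_section(SAMPLE))
```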

The imgStore directory is where the images are uploaded to the server via ssh. It is a temporary directory for all backends except the mysql one. The permissions of this directory must be 777 to allow everyone to upload images. Moreover, when it is used as a temporary directory, the t (sticky) bit must be disabled, because the user that executes the server (i.e. imageman) must be able to remove an image from the temporary directory after it has been uploaded to its final destination. By default, any directory that you create has this bit disabled. However, the /tmp directory of your system has this bit enabled.
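The permission requirements described above (mode 777 and no t bit) can be verified with a few lines of Python. This is only a sketch using the standard os and stat modules; check_img_store is a hypothetical helper and the messages are illustrative.

```python
import os
import stat


def check_img_store(path):
    """Return a list of problems found with the imgStore directory permissions."""
    mode = os.stat(path).st_mode
    problems = []
    if not stat.S_ISDIR(mode):
        problems.append("not a directory")
    if stat.S_IMODE(mode) & 0o777 != 0o777:
        problems.append("permissions are not 777")
    if mode & stat.S_ISVTX:
        problems.append("sticky bit (t) is set")
    return problems


if __name__ == "__main__":
    # /tmp normally has the sticky bit set, so it is expected to be reported.
    print(check_img_store("/tmp"))
```

An empty list means the directory satisfies both conditions.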

The files specified in the configfile and configfileS options contain the password of the services. These files look like:

[client]
password=complicatedpass

In case we want to use a different configuration, we may need to install the python modules to support that.

MySQL (MySQL has to be installed before you install the python module)

sudo easy_install MySQL-python

Swift

sudo easy_install python-cloudfiles

Additionally, if we want to configure the Rest Interface Server, we need to specify the option restConfFile in [RepoServer] Section to identify its configuration file. In this configuration file we need to specify the information about the Rest Interface. A simple configuration file is:

[global]
log.error_file = 'cherrypy.error.log'
log.accessfile = 'cherrypy.access.log'
server.socket_host = "0.0.0.0"
server.socket_port = 8443
server.thread_pool = 10
server.ssl_module="builtin"

To enable HTTPS, we need to install pyopenssl:

sudo easy_install pyopenssl or sudo apt-get/yum install python-openssl

We also need x509 certificates and have to modify the configuration file:

[global]
log.error_file = 'cherrypy.error.log'
log.accessfile = 'cherrypy.access.log'
server.socket_host = "0.0.0.0"
server.socket_port = 8443
server.thread_pool = 10
server.ssl_module="pyopenssl"
server.ssl_certificate="server.crt"
server.ssl_private_key="server.key"
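Before starting the REST server with pyopenssl selected, it can be useful to confirm that both certificate options are present in the file. The sketch below reads the [global] section with configparser and unquotes the values with ast.literal_eval, since the values in this file are written as Python literals. The function missing_ssl_options is hypothetical, and treating these two options as required is our reading of the example above, not a documented rule.

```python
import ast
import configparser

# Options we assume are needed when server.ssl_module is "pyopenssl".
REQUIRED_FOR_PYOPENSSL = ("server.ssl_certificate", "server.ssl_private_key")


def missing_ssl_options(text):
    """Return the SSL options that are absent or empty when pyopenssl is selected."""
    parser = configparser.ConfigParser(interpolation=None)
    parser.read_string(text)
    # Values are Python literals (quoted strings, integers), so evaluate them safely.
    settings = {key: ast.literal_eval(value) for key, value in parser["global"].items()}
    if settings.get("server.ssl_module") != "pyopenssl":
        return []
    return [key for key in REQUIRED_FOR_PYOPENSSL if not settings.get(key)]


if __name__ == "__main__":
    sample = '[global]\nserver.socket_port = 8443\nserver.ssl_module="pyopenssl"\n'
    print("missing options:", missing_ssl_options(sample))
```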

Once you have the configuration files ready and the backend software installed, you can start the image repository and the rest interface servers by executing IRServer.py and IRRestServer.py respectively.

Note

We recommend having a single system user that runs all the servers. This makes it easier to manage the sudoers file when necessary.

In the client side, we need to configure the [Repo] section. More information about this section of the client configuration file can be found in Repo section.

[Repo]
port = 56792
serveraddr=localhost
log=~/clientrepo.log
log_level=debug
ca_cert=/opt/futuregrid/futuregrid/etc/imdclient/cacert.pem
certfile=/opt/futuregrid/futuregrid/etc/imdclient/imdccert.pem
keyfile=/opt/futuregrid/futuregrid/etc/imdclient/privkey.pem

Once you have everything set up, you need to create the users in the image repository. Although users are managed in the LDAP server, the image repository also maintains a database of users to control access, enforce quotas, store statistics, etc. This database is also used by the rest of the framework. The first user that you create will have the admin role by default, so that you can then create more users. The command to add a user is:

fg-repo --useradd <userid>

The executable file of this client is fg-repo. More information about how to use the image repository can be found in the Image Repository Manual.

Note

The userid created in the image repository must be the same as the one in LDAP.

| | Server Side (fg-server.conf) | Client Side (fg-client.conf) |
|---|---|---|
| Access to | | |
| Configure | | |
| Executables | | |

In this section we explain how to configure the Image Generator.

In the Server side we need to configure the [GenerateServer] Section (see GenerateServer section).

[GenerateServer]
port = 56791
proc_max = 5
refresh = 20
wait_max = 3600
nopasswdusers = testuser:127.0.0.1,127.0.0.2;testuser2:127.0.0.1
vmfile_centos = 5:/srv/cloud/one/share/examples/centos5_context.one,6:/srv/cloud/one/share/examples/centos6_context.one
vmfile_rhel =
vmfile_ubuntu = /srv/cloud/one/share/examples/ubuntu_context.one
vmfile_debian =
xmlrpcserver = http://localhost:2633/RPC2
bridge = br1
addrnfs = 192.168.1.6
tempdirserver = /srv/scratch/
tempdir = /media/
http_server = http://fg-gravel.futuregrid.edu/
oneuser = oneadmin
onepass = f8377c90fcfd699f0ddbdcb30c2c9183d2d933ea
log = ~/fg-image-generate-server.log
log_level=debug
ca_cert=/opt/futuregrid/futuregrid/etc/imdserver/cacert.pem
certfile=/opt/futuregrid/futuregrid/etc/imdserver/imdscert.pem
keyfile=/opt/futuregrid/futuregrid/etc/imdserver/privkey.pem
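In the example, vmfile_centos holds comma-separated version:path pairs, while vmfile_ubuntu holds a single path without a version. The sketch below turns either form into a dictionary so the entries can be inspected. The interpretation of the format is inferred from the example only, and parse_vmfile is a hypothetical helper.

```python
def parse_vmfile(value):
    """Map OS version to template path; a path without a version is stored under None.

    The 'version:path,version:path' format is an assumption based on the sample.
    """
    templates = {}
    for item in value.split(","):
        item = item.strip()
        if not item:
            continue  # an empty option, such as vmfile_rhel, yields no entries
        version, sep, path = item.partition(":")
        if sep and not version.startswith("/"):
            templates[version.strip()] = path.strip()
        else:
            templates[None] = item
    return templates


if __name__ == "__main__":
    centos = ("5:/srv/cloud/one/share/examples/centos5_context.one,"
              "6:/srv/cloud/one/share/examples/centos6_context.one")
    print(parse_vmfile(centos))
    print(parse_vmfile("/srv/cloud/one/share/examples/ubuntu_context.one"))
```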

Note

You may need to configure the iptables to open the port specified in the port option to allow the communication with the client.
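To check from a client machine whether the server port is actually reachable, a plain TCP connection attempt is usually enough. The following sketch uses only the standard socket module; port_is_open is a hypothetical helper, and the host and port in the usage line are taken from the sample configuration in this manual.

```python
import socket


def port_is_open(host, port, timeout=2.0):
    """Return True if a TCP connection to host:port succeeds within the timeout."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Covers refused connections, timeouts and name resolution failures.
        return False


if __name__ == "__main__":
    print(port_is_open("fg-gravel.futuregrid.edu", 56791))
```

A False result does not say whether the cause is a firewall rule or a server that is not running, so both should be checked.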

As we described in the Image Generation Section, the Image Generator is supported by an IaaS cloud. Currently, we use OpenNebula for this purpose. Therefore, it is a requirement to have an OpenNebula cloud installed and configured with at least one compute node. Additionally, you need to have the VMs that will be used to generate the images and the templates. The VM templates are specified in the

#---------------------------------------
# VM definition
#---------------------------------------
NAME = "centos5"
CPU = 1
MEMORY = 1024
OS = [ arch="x86_64" ]
DISK = [
  source = "/srv/cloud/images/centos-5.6c1.img",
  target = "hda",
  readonly = "no" ]
NIC = [ NETWORK_ID=0]
NIC = [ NETWORK_ID=1]
FEATURES=[ acpi="no" ]
CONTEXT = [
  files = "/srv/cloud/images/centos/init.sh /srv/cloud/images/imageman_key.pub",
  target = "hdc",
  root_pubkey = "imageman_key.pub" ]
GRAPHICS = [
  type = "vnc",
  listen = "127.0.0.1" ]

Configure the scratch directory specified in the tempdirserver option. To do so, we need to export the directory via NFS so that the VMs can mount it as a scratch disk. Assuming that the tempdirserver option is /srv/scratch and the subnet is 192.168.1.0/24, the configuration steps are:

Install NFS support

sudo apt-get install nfs-kernel-server nfs-common

or

sudo yum install nfs-utils

Create directories

sudo mkdir -p /srv/scratch

sudo chmod 777 /srv/scratch

Export directories. Edit /etc/exports file to insert the following line:

/srv/scratch 192.168.1.*(rw,async,no_subtree_check,no_root_squash)

Refresh NFS server

sudo exportfs -r
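If several directories or subnets have to be exported, the /etc/exports lines can be generated instead of typed by hand. The sketch below builds one line from a directory and a subnet using the standard ipaddress module. It writes the client as a CIDR network (192.168.1.0/24), which the exports file format also accepts, rather than the wildcard form used above; exports_line is a hypothetical helper and the default options are copied from the example.

```python
import ipaddress

# Default export options copied from the example line in this section.
DEFAULT_OPTIONS = ("rw", "async", "no_subtree_check", "no_root_squash")


def exports_line(directory, subnet, options=DEFAULT_OPTIONS):
    """Return one /etc/exports line for the directory and subnet."""
    network = ipaddress.ip_network(subnet)  # raises ValueError on a malformed subnet
    return "%s %s(%s)" % (directory, network.with_prefixlen, ",".join(options))


if __name__ == "__main__":
    print(exports_line("/srv/scratch", "192.168.1.0/24"))
```

The function only produces the text; appending it to /etc/exports and running exportfs -r still has to be done as root.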

Configure user that is going to execute the server. Let’s assume that the name of this user is imageman:

Configure ssh not to check the host ID. This is needed to log into the VMs, because the same IP will be associated with different VMs over time. So, we need to edit the $HOME/.ssh/config file to insert the following lines. The permissions of this file must be 644.

Host *

StrictHostKeyChecking no
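When the server user is created by a provisioning script, the ssh configuration above can be written programmatically. The following sketch creates the file with the two lines and sets its mode to 644. The function write_ssh_config is hypothetical, and note that it overwrites any existing config file, so it is only suitable for a fresh account such as imageman.

```python
import os

# The two lines described above, in ssh_config syntax.
SSH_CONFIG = "Host *\n    StrictHostKeyChecking no\n"


def write_ssh_config(home):
    """Write <home>/.ssh/config with host key checking disabled and mode 644.

    Warning: this replaces an existing config file.
    """
    ssh_dir = os.path.join(home, ".ssh")
    os.makedirs(ssh_dir, mode=0o700, exist_ok=True)
    path = os.path.join(ssh_dir, "config")
    with open(path, "w") as handle:
        handle.write(SSH_CONFIG)
    os.chmod(path, 0o644)
    return path


if __name__ == "__main__":
    import tempfile
    # Demonstrate on a throwaway directory instead of the real home directory.
    print(write_ssh_config(tempfile.mkdtemp()))
```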

Edit the sudoers file by executing visudo as the root user and add the following lines:

imageman ALL=(ALL) NOPASSWD: /usr/bin/python *

imageman ALL=(ALL) NOPASSWD: /usr/sbin/chroot *

imageman ALL=(ALL) NOPASSWD: /bin/mount *

imageman ALL=(ALL) NOPASSWD: /bin/umount *

Configure the Image Repository client, because the Image Generator must be able to retrieve images from and upload images to the repository. See Setting up Image Repository Client. The imageman user must be able to ssh to the Image Repository Server machine without entering a password or passphrase. Therefore, we need to put the imageman public key in the authorized_keys file of the machine where the Image Repository Server is running.

Once everything is set up, you can start the server by executing IMGenerateServer.py as the imageman user.

On the client side, we need to configure the [Generation] section. More information about this section of the client configuration file can be found in Generation section.

[Generation]
serveraddr = fg-gravel.futuregrid.edu
port = 56791
log=~/clientgen.log
log_level=debug
ca_cert=/opt/futuregrid/futuregrid/etc/imdclient/cacert.pem
certfile=/opt/futuregrid/futuregrid/etc/imdclient/imdccert.pem
keyfile=/opt/futuregrid/futuregrid/etc/imdclient/privkey.pem

The executable file of this client is fg-generate. More information about how to use the image generation can be found in the Image Generation Manual.

| | Server Side (fg-server.conf) | Client Side (fg-client.conf) |
|---|---|---|
| Access to | | |
| Configure | | |
| Executables | | |

In this section we explain how to configure the Image Registrator for Cloud and HPC infrastructures.